Snap, one of the world’s top camera software firms, has revealed major updates to its augmented reality (AR) platform, Snap Lens.

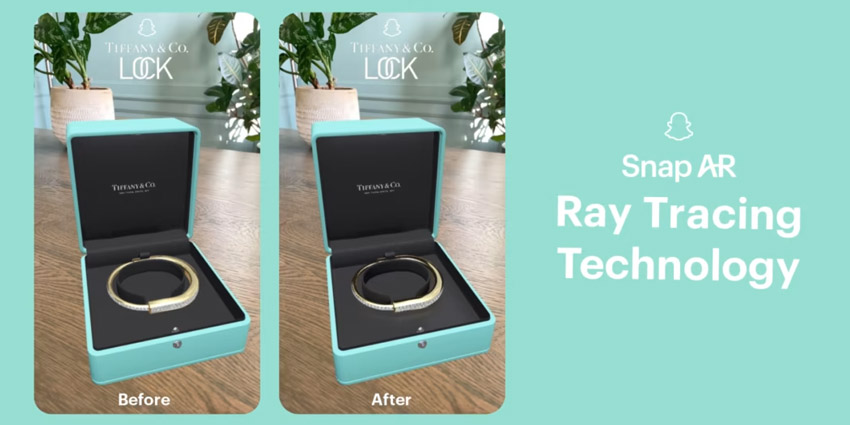

Snap’s Lens Studio introduced Ray Tracing for developers in April last year to boost immersion and realism in AR content. The latest update to Snap Lens gives developers enhanced light-reflection tools for virtual content, helping them create ultra-realistic digital assets.

These tools give users added realism when viewing objects such as jewellery, clothing, machinery, and furniture. People around the world use Snap Lens for a range of purposes, including AR clothing try-ons, shopping, filters, leisure, and gaming.

The first adopter of the technology, Tiffany & Co, is using Snap’s Ray Tracing in its Snapchat-based Tiffany Lock Lens. Potential customers can use the AR filter to try on the firm’s Lock bracelets and buy items without leaving the app.

House of Tiffany Exhibit at Saatchi Gallery

Shortly after the Snap Lens debut, the Santa Monica-based tech firm partnered with Tiffany & Co to launch the House of Tiffany and a further exhibition, Vision & Virtuosity.

The Saatchi Gallery in London, United Kingdom, hosted the event, transforming the building’s exterior into a giant Tiffany jewel.

The immersive experience used Snap’s Custom Landmarker technology, unveiled at last year’s Snap Partner Summit. This year’s summit is set to take place on 19 April.

Announced in August last year, the exhibit offered visitors a bespoke, exclusive AR try-on session through the Tiffany app, which is built on Snap’s Camera Kit.

More on Ray Tracing

According to NVIDIA, ray tracing is a rendering technique that simulates how light behaves in virtual scenes by tracing the paths of rays as they interact with objects. This lets it accurately reproduce shadows, lighting, refraction, and reflections in digital assets and immersive content.

Lighting adapts to the field of view (FoV) and the viewing angle on a headset or device. Depending on where real-time 3D (RT3D) assets and scenery are positioned, ray tracing recalculates the light each source in the environment contributes.
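The view-dependent behaviour described above can be illustrated with a classic shading term. The sketch below uses the Phong specular model, a textbook formula chosen here purely for illustration and not necessarily what Snap’s or NVIDIA’s renderers use; the helper names are hypothetical. It mirrors the incoming light about the surface normal and measures how closely that mirror direction lines up with the viewer, so the same highlight brightens or disappears as the viewing angle changes.

```python
import math

def normalize(v):
    """Scale a 3-tuple to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def specular(to_light, normal, to_viewer, shininess=32):
    """Phong specular term: how bright the highlight looks from to_viewer.

    to_light and to_viewer point away from the surface point.
    """
    # Light travels *into* the surface, so flip the to_light direction first.
    incoming = tuple(-c for c in normalize(to_light))
    mirrored = reflect(incoming, normalize(normal))
    # The closer the viewer is to the mirror direction, the brighter the highlight.
    return max(0.0, dot(mirrored, normalize(to_viewer))) ** shininess

# Light from the upper right hitting a floor-like surface (normal points up).
print(specular((1, 1, 0), (0, 1, 0), (-1, 1, 0)))  # viewer on the mirror side: very close to 1.0
print(specular((1, 1, 0), (0, 1, 0), (1, 1, 0)))   # viewer beside the light: 0.0
```

Only the viewer direction differs between the two calls, which is the point: the lighting a user sees depends on where they are looking from, so it has to be recomputed whenever the headset or phone moves.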

To stay efficient, ray tracing tools from NVIDIA, Epic Games, and Unity Technologies trace rays backward from the viewer’s camera into the scene, rather than following every light path forward from its source.
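To make the backward-tracing idea concrete, here is a toy ray tracer in plain Python. It is an illustrative sketch only, not how Snap, NVIDIA, Epic Games, or Unity implement their renderers: the two-sphere scene, the light position, and every function name are invented for the example. For each pixel it casts one ray from the camera, finds the nearest sphere that ray hits, and then fires a second "shadow ray" toward the light to decide whether that point is lit or in shadow.

```python
import math

# Minimal vector helpers on 3-tuples.
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(v, s): return tuple(x * s for x in v)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def normalize(v): return scale(v, 1.0 / math.sqrt(dot(v, v)))

# Hypothetical scene: a unit sphere resting above a huge "floor" sphere.
SPHERES = [((0.0, 0.0, -3.0), 1.0), ((0.0, -101.0, -3.0), 100.0)]
LIGHT = (5.0, 5.0, 0.0)  # point light position (illustrative)
AMBIENT = 0.1            # brightness of points the light cannot reach

def hit_sphere(origin, direction, center, radius):
    """Distance t to the nearest hit in front of the origin, or None.

    Solves |origin + t*direction - center|^2 = radius^2 for a unit direction.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # smaller root first
        if t > 1e-6:
            return t
    return None

def trace(origin, direction):
    """Follow one ray from the camera and return a brightness in [0, 1]."""
    nearest = None
    for center, radius in SPHERES:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center)
    if nearest is None:
        return 0.0  # ray escaped: background
    t, center = nearest
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    to_light = sub(LIGHT, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    to_light = normalize(to_light)
    # Shadow ray: nudge off the surface, then look for any blocker before the light.
    start = add(point, scale(normal, 1e-4))
    for c, r in SPHERES:
        s = hit_sphere(start, to_light, c, r)
        if s is not None and s < light_dist:
            return AMBIENT
    # Lambertian (diffuse) shading, never darker than the ambient level.
    return max(AMBIENT, dot(normal, to_light))

def render(width=40, height=20, shades=" .:-=+*#%@"):
    """Cast one camera ray per character cell and map brightness to ASCII."""
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            x = (i + 0.5) / width * 2 - 1  # image plane at z = -1
            y = 1 - (j + 0.5) / height * 2
            b = trace((0.0, 0.0, 0.0), normalize((x, y, -1.0)))
            row.append(shades[int(b * (len(shades) - 1) + 0.5)])
        rows.append("".join(row))
    return rows

print("\n".join(render()))
```

The printed rows should show a shaded sphere above a curved floor, with a shadow on the side facing away from the light. Only rays that reach the camera are ever computed, which is why tracing from the viewer outward is far cheaper than simulating every ray a light emits. Production renderers add many bounces, reflection and refraction rays, and acceleration structures on top of this basic loop.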

Ray tracing is most commonly used in film, television, and video games. Other adopters include the architecture, engineering, and construction (AEC) industries.

Epic Games’ Unreal Engine

The news comes as several major platforms for world-building and graphics have launched tools to enhance realism and immersion for developers.

One such platform, Epic Games’ Unreal Engine 5 (UE5), debuted in April last year and introduced Lumen, its dynamic lighting system. The tool lets game and immersive content developers have lighting respond in real time to changes such as the sun’s position, doors opening, and other environmental events.

For developers, this marks a significant leap in rendering quality. Additional UE5 features included Nanite, a virtualised micropolygon geometry system that enables highly detailed, more photorealistic environments, and Virtual Shadow Maps (VSMs), which render shadows on objects while reducing the system resources required.

NVIDIA NeRF

NVIDIA’s Neural Radiance Field (NeRF) photogrammetry tool converts collections of 2D images into RT3D digital twins for a range of uses. The platform uses artificial intelligence (AI) and machine learning (ML) to generate RT3D scans and digital twins of locations and objects on the fly.

It also captures environmental details such as lighting, shadows, and perspective almost instantly, streamlining production pipelines for hyperrealistic virtual objects.
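The original NeRF research renders each pixel by sampling points along a camera ray and alpha-compositing the density and colour the trained network predicts at each point; this is how lighting and shading baked into the captured images reappear from new viewpoints. The sketch below shows only that compositing step, with hand-picked sample values standing in for network outputs. It leaves out the neural network, training, and NVIDIA’s Instant NeRF speed-ups entirely, and the function name is hypothetical.

```python
import math

def composite(densities, colors, deltas):
    """Alpha-composite samples along one camera ray, NeRF-style.

    Implements C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i,
    where T_i = prod_{j<i} exp(-sigma_j * delta_j) is the transmittance.

    densities: sigma_i >= 0 at each sample, ordered front to back
    colors:    (r, g, b) predicted at each sample
    deltas:    distance between consecutive samples
    """
    transmittance = 1.0  # fraction of light not yet absorbed along the ray
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for k in range(3):
            out[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light that passes through
    return tuple(out)

# A faint red haze in front of a solid green surface (made-up values).
print(composite([0.2, 50.0], [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)], [0.5, 0.5]))
```

The result is mostly green with a small red tint: the haze absorbs a little light before the ray reaches the opaque surface, which then contributes almost all of the remaining transmittance.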

Users can bring captured assets into immersive environments or external production pipelines through NVIDIA’s Omniverse platform. Professionals across robotics, manufacturing, AEC, gaming, automotive, and other industries can use the new technologies.