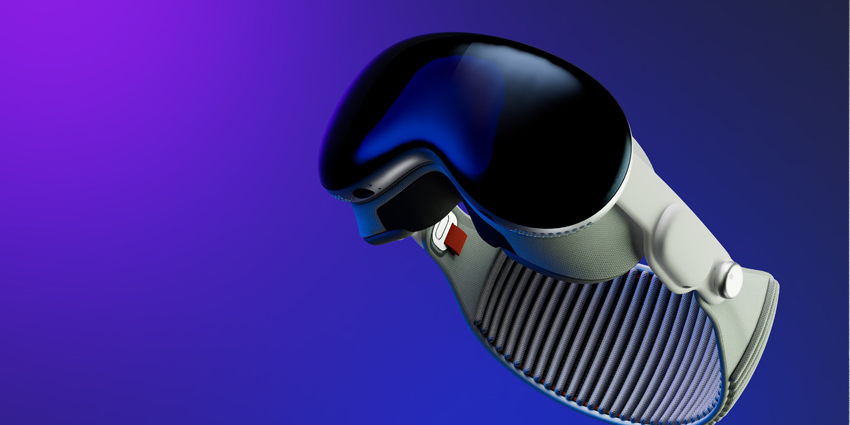

Apple’s Vision Pro device is coming in under a year if predictions are correct.

Starting at $3,499 and due to debut in early 2024, the Vision Pro promises to bring spatial computing and AR workspaces to the mainstream.

An infinite digital canvas is how Apple sees its immersive product. Apple invites users to leverage Vision Pro as a tool to enhance their personal or professional workflows.

The upcoming device contains M2 and R1 processing chips, which Apple designed for its first-party hardware.

The Vision Pro also has a trove of hardware features, including a micro-OLED display with a 4096 x 5464 pixel resolution, eye and hand tracking, voice recognition, spatial audio, high dynamic range (HDR), wide colour gamut (WCG), a 2-hour battery life, iPhone/iPad/Mac synchronization, a light seal, a LiDAR scanner, and a TrueDepth camera.

The device’s high price point may be a tough pill to swallow for many, especially as a list of competitors offers immersive hardware for a fraction of the price. Time will tell if Apple’s cult of techno-personality and its history of application development support will allow the device to shine until more affordable successors arrive, much like the pricing transition that followed the first iPhone.

Introducing visionOS

Apple has a storied history of building solid operating systems to support its range of devices, from its smartwatch to its TV and phone products, and the Vision Pro is no different.

visionOS is the framework empowering Apple’s infinite canvas. Coming later this month, according to Apple, visionOS provides the digital foundations for a spatial computing environment as well as its integrated applications and features.

The OS is obviously in its early days, and most of the device’s supported applications are first-party or well-established services from firms like Zoom and Microsoft that Apple is porting to visionOS. Some integrated applications are marking a first immersive launch, while others have already established an immersive base following periods operating on pre-existing XR devices.

To help introduce immersive developers to its XR vision, Apple is breaking down its framework into three key developmental considerations:

Windows

Windows are the most traditional computing element of Apple’s XR device. They represent the 2D user interface, enabling users to navigate and access 3D immersive applications.

Like an iPhone home screen, Vision Pro windows frame user applications neatly together, ready for operators to choose and manage their applications using the device’s body tracking inputs.

Volumes

Volumes are Vision Pro’s RT3D immersive experiences. After navigating Vision Pro windows, a user can choose their immersive application and display it as an AR visualization overlaid on their real-world environment.

Volume AR visualizations are viewable from any angle. Users can play immersive interactive experiences and digital assets as a volume, which they can interact with using hand/body/eye movements.

Volume-based immersive applications display as partially or fully immersive for different use cases, allowing Vision Pro operators to engage with a Vision Pro service alongside other applications or individually as an isolated programme for total immersion.

Spaces

Spaces represent the spatial computing environment an immersive volume or application exists within. As mentioned, users can experience a Vision Pro service with varying levels of immersion. Apple calls the two options Shared Space and Full Space.

Shared Space allows users to interact with XR content alongside other applications, like a desktop with various applications open.

By contrast, when users choose a Full Space to experience their volume content, they go from an AR to an MR space for total immersion. According to Apple, developers can design “portals” that transition users from AR space to an “unbounded” immersive MR environment.
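
For developers, the three building blocks above map onto scene types in SwiftUI. The following is a minimal sketch, assuming the scene APIs documented in the visionOS SDK (`WindowGroup`, the `.volumetric` window style, and `ImmersiveSpace`). The app name, view names, identifiers, and sizes are hypothetical placeholders rather than anything Apple prescribes.

```swift
import SwiftUI

// Hypothetical placeholder views; a real app would supply its own content.
struct LibraryView: View {
    var body: some View { Text("Library") }
}

struct GlobeView: View {
    var body: some View { Text("Globe") }
}

struct PlanetariumView: View {
    var body: some View { Text("Planetarium") }
}

@main
struct SpatialDemoApp: App {
    // Tracks which immersion style the immersive space currently uses.
    @State private var immersion: ImmersionStyle = .mixed

    var body: some Scene {
        // Window: a conventional 2D interface shown in the Shared Space.
        WindowGroup(id: "library") {
            LibraryView()
        }

        // Volume: a bounded 3D container that also lives in the Shared Space.
        WindowGroup(id: "globe") {
            GlobeView()
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.6, height: 0.6, depth: 0.6, in: .meters)

        // Space: opening this scene moves the app into a Full Space.
        ImmersiveSpace(id: "planetarium") {
            PlanetariumView()
        }
        .immersionStyle(selection: $immersion, in: .mixed, .full)
    }
}
```

In this sketch, the window and the volume sit alongside other applications, while the immersive space only appears once the app explicitly opens it, which matches the shared versus full distinction described above.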

Deep XR Framework Empowering Apple’s Immersive Future

Keen iOS and immersive technology observers will recognize some integrated XR SDKs powering the Vision Pro. Apple includes various XR frameworks, and some are already present in its products.

However, while some of its framework technology is familiar, Apple appears to be updating services like ARKit and RealityKit to suit an XR headset rather than the immersive smartphone features they previously supported.

SwiftUI

Apple claims that SwiftUI is the “best way” to build new immersive applications for the Vision Pro and to port pre-existing iPadOS or iOS services to the device.

SwiftUI provides developers with the tools to design depth, gestures, effects, and immersive scene considerations for a Vision Pro application.

Moreover, SwiftUI integrates the accompanying RealityKit framework for deploying reactive immersive UIs for an RT3D XR application.

RealityKit

RealityKit is the key for XR developers to design 3D content, animations, and visual effects for their Vision Pro applications.

The kit is Apple’s core RT3D rendering engine that will power most of the platform’s immersive visualizations. It also streamlines development with automated tools to create physical lighting conditions, cast shadows, design immersive portals, and deploy visual effects.

Additionally, Apple is integrating MaterialX into the RealityKit offering. The additional service allows platform developers to author their own materials, including surface and geometry shaders.
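
To illustrate how RealityKit content can sit inside a SwiftUI interface, here is a minimal sketch that assumes the `RealityView` type from the visionOS SDK. The view name, sphere size, and colour are illustrative assumptions. It uses a basic `SimpleMaterial` for brevity, not a MaterialX-authored shader.

```swift
import SwiftUI
import RealityKit

// Hypothetical view name; displays a single RealityKit entity inside SwiftUI.
struct SphereView: View {
    var body: some View {
        RealityView { content in
            // Build a 10 cm-radius sphere with a plain, non-metallic blue material.
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            // Add the entity to the view's RealityKit content.
            content.add(sphere)
        }
    }
}
```

A view like this could be placed in a volume or an immersive space, with RealityKit handling rendering, lighting, and shadows for the entity.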

ARKit

ARKit is a well-established tool in the XR developer space. The service helps devices, including iPhones, iPads, and now Vision Pro hardware, detect the environments around a user to ensure accurate placements and interactions.

The kit allows for shared volumes to display clearly alongside neighbouring immersive applications. On the other hand, fully immersive volumes can take advantage of ARKit features like plane estimation, scene reconstruction, image anchoring, world tracking, and skeletal hand tracking for a secure immersive experience.

Apple states that its ARKit framework can allow developers to create immersive experiences which react to the real world, such as bouncing a virtual ball against a real wall.

Accessibility

An increasing consideration for the XR industry is accessibility and inclusion. XR devices hold a unique space as the hardware allows a large spectrum of individuals to enjoy immersive content, and the Vision Pro is no different.

Notably, due to its controller-less interface, the Vision Pro gives users a selection of options to interact with their environment using their bodies, inviting a broader group to create for and use the device.

Apple also supports the XR accessibility initiative with integrated tools like Pointer Control to create accessible, immersive applications.

Xcode

Apple is also integrating Xcode, which allows developers to test a visionOS SDK application within a simulated environment, including various room layouts and lighting conditions.

Xcode also allows developers to digitally test collisions, occlusions, and scene understanding without directly using the firm’s hardware.

Reality Composer Pro

Apple is also re-introducing Reality Composer for its Vision Pro development suite. The firm updated the service so that the hardware’s upcoming developers can manage immersive assets for a Vision Pro application, such as 3D models, digital twins, materials, and sounds.

Additionally, Reality Composer Pro integrates closely with Xcode to enable developers to test their assets in a simulated space – inviting XR developers to start creating ahead of the product’s release.

Unity

Tried and tested; that’s the best way to describe Unity. The long-standing RT3D engine is already an incredibly familiar tool for AR/VR/MR content developers. The engine powers many enterprise and consumer-facing applications; now, Apple is supporting the use of the service for its device.

Vision Pro developers can leverage Unity’s RT3D authoring tools to create new services or port over pre-existing Unity Projects – a huge porting opportunity for the vast amount of Unity-based immersive applications already available on the market.

Will the Vision Pro See Developer Success?

The list of Vision Pro developer tools is vast. Apple clearly intends to provide a developer space for creating a solid application ecosystem. Whether developers are ready to take the financial plunge, though, will remain unclear until the device launches next year.

However, it is fair to note that while the price point is high, Apple is attempting to create a resource-rich space to help ease developers towards developing for the hardware.

With developmental simulation tools like Xcode and Reality Composer Pro, developers who feel they cannot access the Vision Pro device due to economic or regional factors could leverage the SDK suite to familiarise themselves with the developmental space.