Tobii, a global leader in eye-tracking, announced earlier this year that it was in talks with Sony to include its tech in the upcoming PlayStation VR2. Now the company has confirmed its eye-tracking is integrated in PSVR 2.

Update (July 1st, 2022): Tobii has officially announced it is a key manufacturer of PSVR 2’s eye-tracking tech. The company says in a press statement that it will receive upfront revenue as part of the deal starting in 2022, and that revenue from the deal is expected to represent more than 10% of Tobii’s revenue for 2022.

“PlayStation VR2 establishes a new baseline for immersive virtual reality (VR) entertainment and will enable millions of users across the world to experience the power of eye tracking,” said Anand Srivatsa, Tobii CEO. “Our partnership with Sony Interactive Entertainment (SIE) is continued validation of Tobii’s world-leading technology capabilities to deliver cutting-edge solutions at mass-market scale.”

The original article follows below:

Original Article (February 7th, 2022): Tobii released a short press statement today confirming that negotiations are ongoing, additionally noting that it’s “not commenting on the financial impact of the deal at this time.”

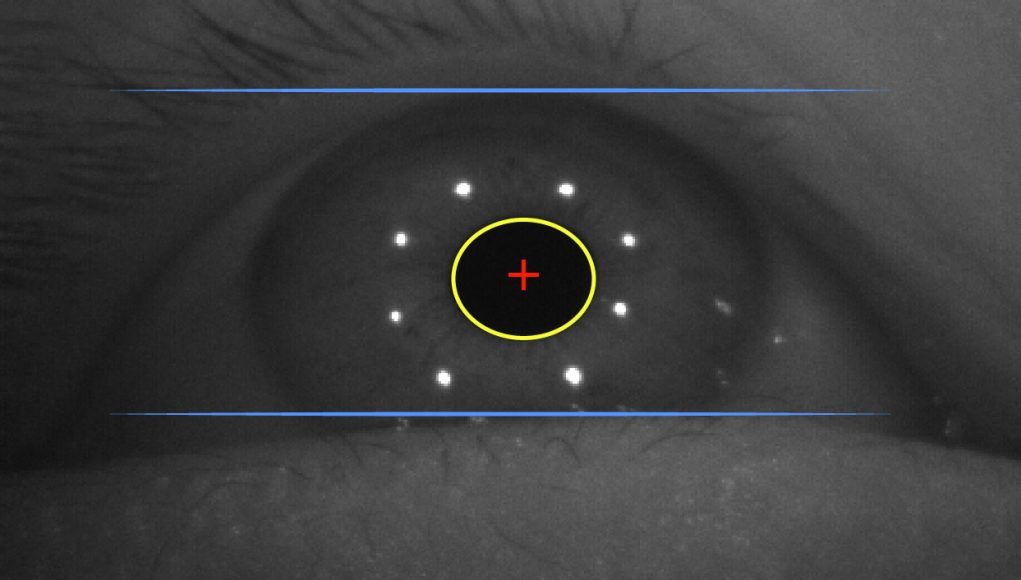

It was first revealed back in May 2021 that Sony would include eye-tracking in PSVR 2, with the mention that it would provide foveated rendering for the next-gen VR headset. Foveated rendering allows the headset to render scenes in high detail exactly where you’re looking, and in lower detail in your peripheral vision. That essentially frees up precious compute power that PSVR 2 can spend elsewhere.
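
To make the idea concrete, here is a minimal Python sketch of how a gaze point might drive per-tile render resolution. It is purely illustrative and is not Sony’s or Tobii’s implementation: the function names, the tile grid, and the two radius thresholds are all hypothetical values chosen for the example.

```python
import math

# Hypothetical thresholds, in normalized screen units (0..1). Illustrative only.
FOVEA_RADIUS = 0.15
MID_RADIUS = 0.35


def shading_scale(tile_center, gaze):
    """Return the fraction of full linear resolution to render a tile at."""
    dist = math.dist(tile_center, gaze)
    if dist <= FOVEA_RADIUS:
        return 1.0   # full detail where the user is looking
    if dist <= MID_RADIUS:
        return 0.5   # reduced detail just outside the fovea
    return 0.25      # lowest detail in the periphery


def relative_cost(gaze, grid=8):
    """Estimate shading cost relative to rendering every tile at full detail."""
    total = 0.0
    for row in range(grid):
        for col in range(grid):
            center = ((col + 0.5) / grid, (row + 0.5) / grid)
            scale = shading_scale(center, gaze)
            # Pixel count scales with the square of linear resolution.
            total += scale * scale
    return total / (grid * grid)
```

Calling `relative_cost((0.5, 0.5))` with the gaze at the center of the screen returns a value below 1.0, which is the saving in this toy model. Real headsets work with lens distortion, per-eye gaze, and GPU-level shading-rate controls, none of which are modeled here.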

Founded in 2001, Tobii has become well known in the industry for its eye-tracking hardware and software stacks. The Sweden-based firm has partnered with VR headset makers over the years, and its technology can be found in a number of devices, such as HTC Vive Pro Eye, HP Reverb G2 Omnicept Edition, Pico Neo 2 Eye, Pico Neo 3 Pro Eye, and several Qualcomm VRDK reference designs.

It’s still unclear when PSVR 2 is slated to arrive, although it may be positioned to become the first true commercial VR headset to feature eye-tracking, provided PSVR 2 isn’t beaten to market by Project Cambria, the rumored ‘Quest Pro’ headset from Meta, which is also said to include face and eye-tracking.