New research from Kent State University and Meta Reality Labs has demonstrated large dynamic focus liquid crystal lenses which could be used to create varifocal VR headsets.

Vergence-Accommodation Conflict in a Nutshell

In the VR R&D space, one of the hot topics is finding a practical solution for the so-called vergence-accommodation conflict (VAC). All consumer VR headsets on the market to date render images using stereoscopy, which creates 3D imagery that supports the vergence reflex of a pair of eyes (when they converge on an object to form a stereo image), but not the accommodation reflex of an individual eye (when the lens of the eye changes shape to focus light at different depths).

In the real world, these two reflexes always work in tandem, but in VR they become disconnected: the eyes continue to converge where needed, but their accommodation remains static because all of the light comes from the same distance (the display). Researchers in the field say VAC can cause eye strain, make it difficult to focus on close imagery, and may even limit visual immersion.
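To put rough numbers on the mismatch, here is a minimal sketch that compares where the eyes converge with where they must focus. The 63 mm interpupillary distance and the 2 m display focal distance are illustrative assumptions, not figures from the research, and the function names are hypothetical.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating a point straight ahead.
    ipd_m (interpupillary distance) defaults to an assumed 63 mm."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def accommodation_demand_d(distance_m):
    """Focus demand in diopters (D): the reciprocal of the distance in meters."""
    return 1.0 / distance_m

def vac_mismatch_d(virtual_distance_m, display_focal_distance_m):
    """Gap, in diopters, between the depth the eyes converge on (the virtual
    object) and the depth they must focus at (the fixed display image)."""
    return abs(accommodation_demand_d(virtual_distance_m)
               - accommodation_demand_d(display_focal_distance_m))

# Assumed example: a virtual object 0.5 m away, optics focused at 2 m.
print(f"vergence angle: {vergence_angle_deg(0.5):.2f} deg")
print(f"focus mismatch: {vac_mismatch_d(0.5, 2.0):.2f} D")
```

The mismatch grows quickly as virtual objects come closer, because diopters scale with the reciprocal of distance. That is consistent with close imagery being where VAC is most noticeable.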

Seeking a Solution

There have been plenty of experiments with technologies that could be used in varifocal headsets that correctly support both vergence and accommodation, for instance holographic displays and multiple focal planes. But it seems none have cracked the code on a practical, cost-effective, and mass-producible solution to VAC.

Another potential solution to VAC is dynamic focus liquid crystal (LC) lenses, which change their focal length as the voltage applied to them is adjusted. According to a Kent State University graduate student project with funding and participation from Meta Reality Labs, such lenses have been demonstrated previously, but mostly in very small sizes because the switching time (how quickly focus can be changed) increases significantly as the lens gets larger.

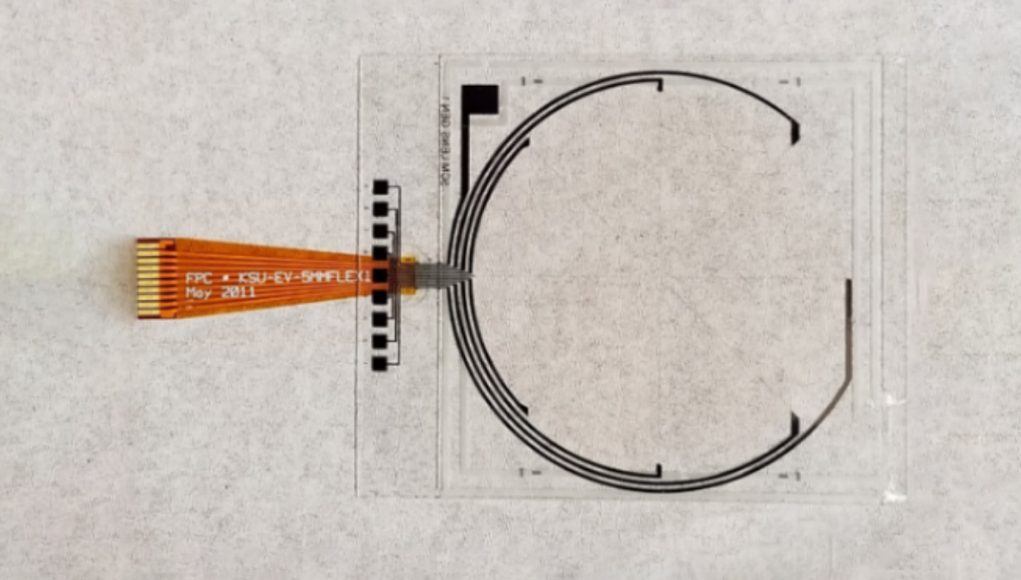

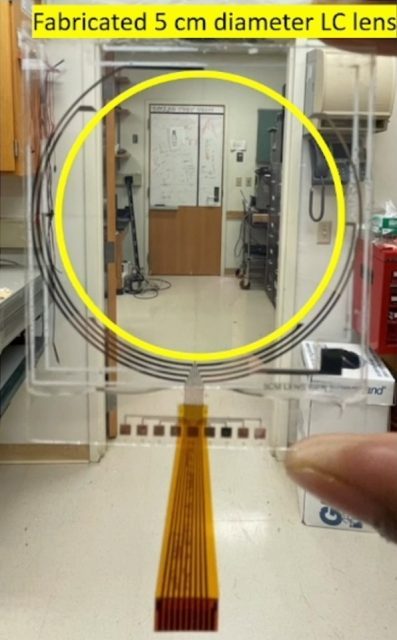

To reach the size of dynamic focus lens that you’d want for a contemporary VR headset while keeping switching time low enough, the researchers have devised a large dynamic focus LC lens with a series of ‘phase resets’, which they compare to the rings used in a Fresnel lens. Instead of segmenting the lens in order to reduce its thickness (as with Fresnel), the phase reset segments are powered separately from one another so the liquid crystals within each segment can still switch quickly enough to be practical for use in a varifocal headset.

A Large, Experimental Lens

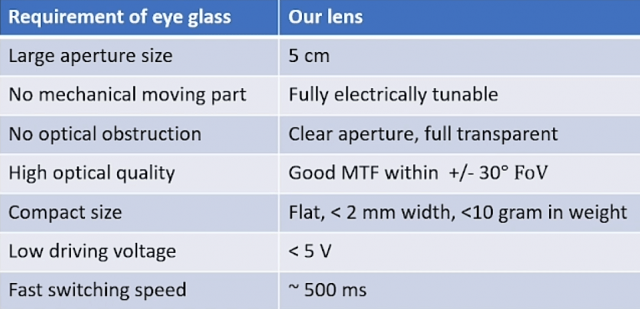

In new research presented at the SID Display Week 2022 conference, the researchers characterized a 5cm dynamic focus LC lens to measure its capabilities and identify strengths and weaknesses.

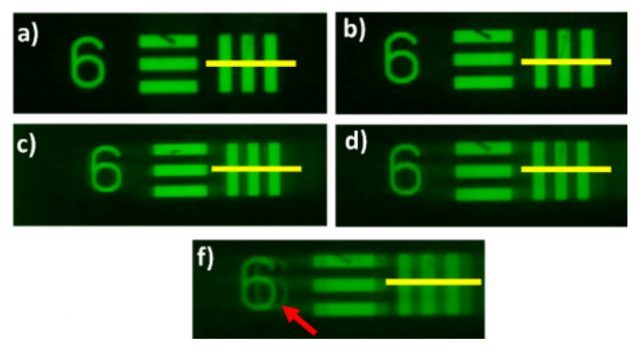

On the ‘strengths’ side, the researchers show the dynamic focus lens achieves high image quality toward the center of the lens while supporting a dynamic focus range from -0.80 D to +0.80 D and a sub-500ms switching speed.

For reference, a 90Hz headset shows the user a new frame roughly every 11ms (90 times per second), while a 500ms switching time is the equivalent of 2Hz (two times per second). While that’s much slower than the framerate of the headset, it may be within a practical range when considering the rate at which the eye can adjust to a new focal distance. Further, the researchers say the switching time can be reduced by stacking multiple lenses.
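The arithmetic behind that comparison can be checked with a short sketch. The 90Hz and 500ms figures are the ones quoted above; the helper names are purely illustrative.

```python
def frame_time_ms(refresh_hz):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / refresh_hz

def rate_hz(period_ms):
    """How many times per second an event with the given period can occur."""
    return 1000.0 / period_ms

def frames_per_switch(switch_ms, refresh_hz):
    """Number of display frames that elapse during one focus change."""
    return switch_ms / frame_time_ms(refresh_hz)

print(f"frame time at 90Hz: {frame_time_ms(90):.1f} ms")
print(f"500ms switching as a rate: {rate_hz(500):.0f} Hz")
print(f"frames shown during one switch: {frames_per_switch(500, 90):.0f}")
```

In other words, at the upper bound of 500ms the headset would draw about 45 frames while the lens completes a single focus change.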

On the ‘weaknesses’ side, the researchers find that the dynamic focus LC lens suffers from a reduction in image quality as the view approaches the edge of the lens due to the phase reset segments—similar in concept to the light scattering due to the ridges in a Fresnel lens. The presented work also explores a masking technique designed to reduce these artifacts.

Ultimately, the researchers conclude, the experimental dynamic focus LC lens offers “possibly acceptable [image quality] values […] within a gaze angle of about 30°,” which is fairly similar to the image quality falloff of many VR headsets with Fresnel optics today.

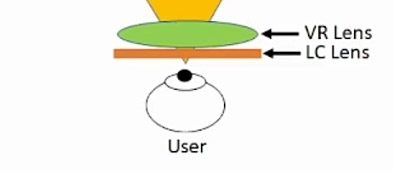

To actually build a varifocal headset from this technology, the researchers say the dynamic focus LC lens would be used in conjunction with a traditional lens to achieve the optical pipeline needed in a VR headset. Precise eye-tracking is also necessary so the system knows where the user is looking and thus how to adjust the focus of the lens correctly for that depth.
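As a rough illustration of that control loop, the sketch below turns an eye-tracked vergence angle into a fixation distance and then into a power setting for the LC lens, limited to the -0.80 D to +0.80 D range reported for the prototype. Everything else is an assumption made for illustration: the 63 mm interpupillary distance, the 1.25 m base focal distance supplied by the traditional lens, the thin-lens approximation with the lens at the eye, and the function names. It is not the researchers' method.

```python
import math

# Dynamic focus range reported for the experimental lens, in diopters.
LC_MIN_D, LC_MAX_D = -0.80, 0.80

def fixation_distance_m(vergence_deg, ipd_m=0.063):
    """Distance to the point both eyes converge on, from the angle between
    their lines of sight. ipd_m is an assumed interpupillary distance."""
    half_angle = math.radians(vergence_deg) / 2
    if half_angle <= 0:
        return math.inf  # parallel gaze: looking at infinity
    return (ipd_m / 2) / math.tan(half_angle)

def lc_lens_power_d(target_distance_m, base_focal_distance_m=1.25):
    """Power the LC lens would add so the image appears at the target distance.
    Assumes the fixed lens alone focuses the image at base_focal_distance_m
    (hypothetical value) and treats both lenses as thin and at the eye."""
    wanted = (1.0 / base_focal_distance_m) - (1.0 / target_distance_m)
    # Clamp to what the lens can physically deliver.
    return max(LC_MIN_D, min(LC_MAX_D, wanted))

for angle_deg in (0.0, 3.0, 6.0, 12.0):
    d = fixation_distance_m(angle_deg)
    print(f"vergence {angle_deg:4.1f} deg -> distance {d:.2f} m, "
          f"LC power {lc_lens_power_d(d):+.2f} D")
```

Under these assumptions, a base focal distance of 1.25 m (0.8 D) lets the ±0.80 D range cover virtual depths from about 0.625 m out to infinity. Anything closer is clamped at the lens's limit.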

The paper presents measurement methods and performance benchmarks for the lens, which future researchers can use as a baseline for testing their own work or to identify improvements that could be made to the demonstrated design.

The full paper has not yet been published, but it was presented by its lead author, Amit Kumar Bhowmick, at SID Display Week 2022, and further credits Afsoon Jamali, Douglas Bryant, Sandro Pintz, and Philip J Bos, of Kent State University and Meta Reality Labs.