What does not convince me about the eye-and-hand interface of the Apple Vision Pro

The Apple Vision Pro is the device of the moment: since preorders opened on January 19th, my social media feed has been full of posts that talk about nothing but this device. And this is for a good reason: Apple entering the field is for sure a defining moment for the immersive reality space.

One of the most talked-about aspects of the Vision Pro is that it features no controllers and that the interface works only with the eyes and the hands. Apple has spent a lot of time fine-tuning these natural interactions, which many competitors are already thinking about copying. But is it a good idea? Well, mostly yes but sometimes no…

The Vision Pro is the first version of a product

Before getting into the details of this post, let me clarify a few things. First of all, I know that the Vision Pro is the first product of the Vision line and that Apple is still “learning”, so it’s fine that it is not perfect. Second, I appreciate that Apple is trying to offer an innovative interface that feels totally natural to the user, and it has already done great work in this sense. Third, I know that doing this is a very hard task: usually, to make life easier for the users, we developers and designers have to make our own lives very complex! Fourth, I have not tried the Vision Pro yet, but I’ve read countless reviews about it, and I have started to see a clear pattern in what people indicate as the pros and cons of this device. Still, some of what I say may not be fully accurate, precisely because I’ve not tried the device myself.

All of this is to say that whatever is in this post must be taken as constructive criticism, not as a way to put down what is for sure a very interesting device that I’m excited about myself.

The mouse, the highlander of the controllers

Before speaking about the futuristic Apple Vision Pro, let me talk about something very common: our dear mouse.

The mouse is one of the simplest controllers and we use it every day when we are at our computer. It is a very old device: it was conceived during the ’60s and started to be used together with computers in the ’70s, until it became widespread with the diffusion of personal computers. From the ’70s to now a lot has changed in the IT field: we went from workstations to tiny laptops, but the mouse is still there, with more or less the same form factor and just some incremental evolutions (e.g. improved ergonomics and the addition of the wheel). We’ve gone through science fiction movies predicting that we would interact with computers with our minds, our hands, or holographic devices… but we are still here with this old tech in our hands. How is it possible?

Well, if you think about it, the mouse has a lot of advantages:

- It is cheap

- It is easy to understand and use

- It makes your hand stay at rest because it lies on the table

- It is clear when you want to give an input: you have to actively click, or scroll

- It is very precise in tracking the pointer

- It is very reliable: unless your mouse is broken or you are on a non-textured surface, every click is detected, and every movement is detected

The mouse just works. And it is incredibly reliable, so it is perfect as input. No one wants to work every day with a faulty input: as Michael Abrash said during one of the Oculus Connects, no one would work with a mouse that works only 90% of the time… imagine if one click out of every ten were misdetected… you would probably throw your mouse out of the window. 90% is a very high percentage, but still, for input detection it is not enough.

The mouse is my benchmark for input: the moment some XR input mechanism is comparable to the mouse, we will have found the right control scheme for headsets.

Meet Apple Vision Pro’s natural interface

As you already know, Apple Vision Pro completely relies on eye and hand tracking. Apple loves to simplify things and make people interact naturally with technology, so making people use their hands as they use them every day, instead of controllers, seemed a really good idea. I think this choice was made because Apple’s end goal is to create AR glasses, which are the technology that people will wear all day, every day, and it is not realistic that people will carry controllers with them too. If something must work in the streets, it should rely just on the glasses themselves and the user, so it makes sense to me that Apple is already experimenting with this kind of input. It would have made no sense to release a device with a technology that they already know will be removed in future iterations.

The idea at the base of their interface is that you look with your eyes at the element you want to interact with, and then you perform the interaction with your hands. So you look at a photo, and then by pinching with your fingers you can zoom it; or you look at a menu item and by air-tapping you click to activate it. On paper, this is incredibly cool, because we tend to look at the objects we interact with, so using the eyes as an “intention indicator” and the hands as the clear input action seems the perfect idea. And in fact, the people who had a scripted 30-minute demo of the Apple Vision Pro before the launch all came out excited about it. Because it mostly works.
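To make the “eyes point, hands click” idea concrete, here is a minimal Python sketch. It is not Apple’s implementation nor any visionOS API: the `Element` class, the `gaze_target` and `on_pinch` functions, and the flat 2D rectangle hit test are all hypothetical names and simplifications that I’m assuming just for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Element:
    """A hypothetical rectangular UI element (not a visionOS type)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gx: float, gy: float) -> bool:
        # True if the gaze point (gx, gy) falls inside the rectangle.
        return (self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)


def gaze_target(elements: list[Element], gx: float, gy: float) -> Optional[Element]:
    """Return the first element under the gaze point, or None."""
    for element in elements:
        if element.contains(gx, gy):
            return element
    return None


def on_pinch(elements: list[Element], gx: float, gy: float) -> Optional[str]:
    """The pinch is the 'click'; the gaze decides what gets clicked."""
    target = gaze_target(elements, gx, gy)
    return target.name if target else None


ui = [Element("photo", 0, 0, 100, 100), Element("menu", 120, 0, 50, 20)]
print(on_pinch(ui, 130, 10))  # gaze is on the menu item
print(on_pinch(ui, 110, 50))  # gaze falls in the gap between the elements
```

In this toy model the second pinch activates nothing: the hand gesture was performed correctly, but the gaze estimate landed just outside an element, so the “click” goes nowhere.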

But now that the device is in the wild, many reviewers have reported that the interface works most of the time, but in some cases it can also be frustrating. As The Verge titled its review, “The Apple Vision Pro is magic until it’s not”. Let’s see what problems sometimes arise.

Eye and hand tracking must be more reliable

Eye and hand tracking are cool, but the technology behind them is not fully mature. Apple worked hard to guarantee the highest tracking quality possible, but according to reviewers, there are still times when it does not work. Do you remember when I said that no one would use a mouse that is not reliable 100% of the time? Well, we are still in the case of the faulty mouse.

Problems with hand tracking are more acceptable, because we are used to our hands failing even in the real world (e.g. we fail at grabbing something, or at pointing to an object). Failures in eye tracking, instead, feel strange to our brain, because we have no way to “look better” at an object if the eye tracking fails to detect where we are looking. I have experienced this myself in all my tests with eye tracking: if I’m looking at an object and the system does not highlight it, what am I supposed to do? Look at it with more intensity?

In the end, you learn that you should physically move a bit and try to put the object you are looking at more in front of you (eye tracking performance usually degrades when you look at the periphery of your vision), or that you have to look in another direction and then aim again at the object, but this is totally awkward and not in line with the spirit of a natural interface.

Eyes shouldn’t be used as input

Eyes are the pointers of the Apple Vision Pro.

I keep repeating this every time I try an eye-tracked interface: eyes are NOT an active input mechanism. This is something that should be clear to everyone. Eyes have behaviors that are only partially consciously driven: most of the time we move them automatically, without even noticing. For instance, eyes perform fast, automatic saccadic movements to explore the surroundings. Eyes are always in exploration mode, unless they are fixated on a specific element, like in my case now, with my eyes staring at my screen to write this post. Eyes are also connected to some brain activities: if I ask you now to think about what you ate yesterday for breakfast, your eyes will probably move upwards to support the memory access of your brain. These are all behaviors that have been encoded in our brains for thousands of years, and exactly as Apple cannot defy the laws of physics and so had to deliver a heavy headset, it cannot defy the laws of neurology, so it cannot expect us to use the eyes as it wants us to.

The brain is smart enough to adapt to every situation, so of course it can learn the skill of using the eyes as input, but that is not its natural way of working. The moment Apple requires you to actively look at something to interact with it, it is forcing you to use your eyes as a pointing mechanism, which is wrong, because eyes are meant to explore around, not to fixate on elements to support a clicking mechanism.

I know, I know… now most of you would say: but Tony, actually Apple is smart because it just exploits the fact that you naturally look at the objects you want to interact with, so there is no use of the eyes as an active pointer. Well…

The assumption that eyes look at what you are interacting with is sometimes flawed

The key to this article is the repetition of adverbs like “usually”, “most often”, “naturally”, and so on. Because as I’ve said in the beginning, we want an input mechanism that works 99.999% of the time, not 90% of the time. We want it to pass “the mouse test” (maybe I should trademark this name).
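The gap between those two percentages is larger than it looks, because errors pile up over a whole task. Here is a quick back-of-the-envelope calculation in Python. It assumes (my simplification, not a measured property of any headset) that every input is detected independently with the same probability; the task length of 20 inputs and the session of 1000 inputs are arbitrary numbers I picked for illustration.

```python
def flawless_task_probability(per_input_reliability: float, inputs: int) -> float:
    """Probability that ALL `inputs` are detected correctly, assuming each
    one succeeds independently (a simplifying assumption)."""
    return per_input_reliability ** inputs


def expected_misdetections(per_input_reliability: float, inputs: int) -> float:
    """Average number of misdetected inputs out of `inputs`."""
    return (1 - per_input_reliability) * inputs


for reliability in (0.90, 0.99999):
    p = flawless_task_probability(reliability, 20)
    misses = expected_misdetections(reliability, 1000)
    print(f"reliability {reliability:.5f}: "
          f"P(20 inputs all fine) = {p:.4f}, "
          f"expected misses per 1000 inputs = {misses:.2f}")
```

Under these assumptions, at 90% reliability a task made of 20 inputs goes through without a single hiccup only about 12% of the time, and you should expect around 100 misdetections every 1000 inputs. That is the difference between a tool you forget about and a tool you fight with.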

Is it true that USUALLY, we look at the objects we are interacting with, on a computer and in real life? Yes. Is it true that we ALWAYS do that? Absolutely not. When you drive, you move hands and feet on various elements of the car while usually looking in front of you. I’m typing this article while looking at the screen, while my hands are typing on the keyboard… I spent so much time learning how to touch-type and for sure I do not want to go back. When I cook, I look at the pan I’m putting ingredients in, but I also keep glancing around to check if the other food I’m cooking is fine. And so on. Our brain coordinates the input and output of our eyes with our limbs to reach a certain goal, and may do that in different ways, sometimes making them focus on the same object, sometimes not.

You may argue that these examples concern manual work, not a digital interface, so they do not apply to the Vision Pro. Well, in one of the reviews I’ve read, the user was trying to move an element from one position to another in a certain application. The application expected the eyes to keep looking at the object being moved, because that was the subject of the interaction. But when we move something, our eyes tend to look at the destination, not at the source, and as soon as the user’s eyes stopped looking at the element, the movement was interrupted, to the great frustration of the reviewer. So, on the Vision Pro too, there are cases where you would like your eyes to do something else.
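One possible way around this, sketched below in Python, is to let the gaze choose the object only at the moment the pinch starts, and then “latch” onto it until the pinch is released. I don’t know how the app in that review, or visionOS itself, actually handles drags: the `DragSession` class and its `require_continuous_gaze` flag are hypothetical and exist only to contrast the two policies.

```python
from typing import Optional


class DragSession:
    """Hypothetical drag handler contrasting two gaze policies."""

    def __init__(self, require_continuous_gaze: bool):
        self.require_continuous_gaze = require_continuous_gaze
        self.dragged: Optional[str] = None

    def pinch_start(self, gazed_object: Optional[str]) -> None:
        # The gaze picks the object once, when the pinch begins.
        self.dragged = gazed_object

    def update(self, gazed_object: Optional[str]) -> None:
        # Strict policy: looking away from the object cancels the drag.
        if self.require_continuous_gaze and gazed_object != self.dragged:
            self.dragged = None
        # Latched policy: the gaze is ignored until the pinch is released.

    def pinch_end(self) -> Optional[str]:
        # Return what is being dropped (None if the drag was cancelled).
        dropped, self.dragged = self.dragged, None
        return dropped


def simulate(require_continuous_gaze: bool) -> Optional[str]:
    session = DragSession(require_continuous_gaze)
    session.pinch_start("photo")   # user looks at the photo and pinches
    session.update("photo")        # still looking at it
    session.update("trash_bin")    # eyes jump ahead to the destination
    return session.pinch_end()     # what actually gets dropped


print(simulate(require_continuous_gaze=True))   # the drag was cancelled
print(simulate(require_continuous_gaze=False))  # the drag survived
```

With the strict policy the photo is lost as soon as the eyes jump to the destination; with the latched one the eyes are free to look wherever they naturally go during the movement.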

The result of forcing your eyes to work as an input mechanism is a sense of fatigue, which in fact some people who tried the Vision Pro are reporting.

The Midas touch

Eye tracking and hand tracking also suffer from the Midas touch problem… whatever you do may be interpreted as input. There is no way to clearly start and stop the input detection: it is always on. And this may lead to unexpected issues.

This is something I experience every day with my Quest 3. Sometimes when I’m downloading a big application on my Quest, I put the device on my forehead, and while I’m waiting for the download to finish, I check my emails on my laptop. But the movement of my hands on the keyboard is detected by the Quest 3 as input, so when I put the headset back on, I discover I have accidentally opened the browser, bought a Peppa Pig game on the Quest Store, set my operating system language to Arabic and sent a dick pic to my grandmother.

In a review of the Vision Pro (maybe the one by WSJ’s Joanna Stern), the reviewer clearly says that sometimes she was just moving her hands in front of the device to do something else, and the device detected that as input. Imagine how dangerous this can be for us Italians, who make a lot of hand gestures while we speak!

Hand tracking has its share of problems, too

Hand tracking alone also has problems of its own that we know well. People who tried to use the virtual keyboard found it frustrating for anything more than a simple input. We all know that without perfect finger tracking and haptic feedback, having a good virtual keyboard is complicated.

Then, hand tracking is considered a “natural” form of input, but this is true only if you implement natural interactions. For instance, grabbing or poking an object without using controllers is absolutely great. But what if you want to return to the main menu or you want to teleport forward? Well, the developer of the experience (or of the OS) has to invent some gestures to trigger those actions. Those gestures are totally unnatural (I do not face my palm up and pinch my fingers to move in real life… I just move my feet) and are hard to remember (every time I have to open the Quest menu with my hands, I have to stop a second and remember which one is the right gesture). So hand tracking can be very natural or very unnatural depending on the use case.

The mouse won the mouse test with Vision Pro

In the end, only the mouse passed the “mouse test” with the Vision Pro. Many reviewers managed to work very well with the Apple Vision Pro the moment they connected it to a mouse and a keyboard, or directly to a Mac laptop. Many journalists wrote posts about the Vision Pro while wearing the device, and they did it with the plain old mouse and keyboard. The super “Spatial Computing” still relies on a device from the ’60s. Will Apple rebrand the mouse as the “Spatial hand pointer” and sell it at $150 to avoid admitting it has a problem?

So what is the input of the future of XR?

I have no idea what the interaction system of the future should be, then… if I had an exact answer for this, I would be a billionaire. Probably eyes, voice, and hand tracking are what we need to use our future AR glasses wherever we are, but they need to be better than they are now.

First of all, the tracking performance should improve and become close to perfect. This is the easy part, because it’s just a matter of time before it happens. Then, I still think that for some specific use cases we will use controllers. For instance, we return home and we pick up some controllers to play action games. Controllers are in my opinion much more suitable for walking and shooting in games because they offer a better control scheme, and they also provide the haptic sensation that is very satisfying when you are shooting.

I also believe that eyes should be left freer to explore in the XR interface. There should be some magic that understands what the eyes are looking at and why: most of the time the eyes can be a hint of what the user wants to interact with, other times of the end goal of an interaction (e.g. when throwing, you look at the object you want to hit), other times they are just wandering around. Using some neuroscience principles mixed with some AI algorithms, gathering data across many users, may be the “magic” that we need to make better use of our eye and hand interactions.

I remember that someone working at Apple mentioned he was working on some neuroscience magic, so probably this is what Apple is already trying to do, and maybe it will refine the AI models with the data taken from the early adopters.

If we could put some BCI sensor somewhere (maybe in the frames of the glasses?), maybe also some brain waves could be put in the mix of data to understand the intention of the user.

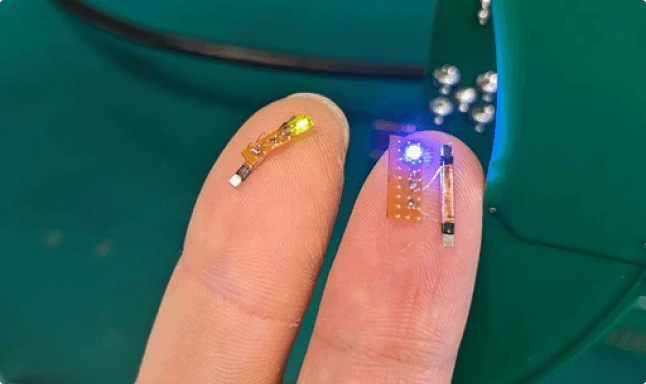

Also, the addition of other non-invasive devices may help. I’m very intrigued by the possibility of using a ring as an input for XR glasses… it’s unobtrusive, can be carried around, and can be used to give clear directions to the device. For instance, it could be used to manually scroll between options, or even to activate/deactivate eye-hand input and avoid the Midas touch problem. The smartwatch may have a similar function, too, but I still prefer the ring because you can access it by moving only the fingers.
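As a rough illustration of the activate/deactivate idea, here is a Python sketch of a “clutch”: gestures are forwarded to the system only while an explicit switch is on. The `RingClutch` class and its methods are invented for this example; I’m not describing any existing ring or headset API.

```python
class RingClutch:
    """Hypothetical gate that drops gestures unless input is armed."""

    def __init__(self) -> None:
        self.armed = False
        self.accepted: list[str] = []

    def ring_press(self) -> None:
        # A deliberate physical press toggles input detection on or off.
        self.armed = not self.armed

    def on_gesture(self, gesture: str) -> bool:
        # Gestures made while disarmed (typing, talking with your hands)
        # are ignored instead of being treated as input.
        if not self.armed:
            return False
        self.accepted.append(gesture)
        return True


clutch = RingClutch()
clutch.on_gesture("pinch")  # ignored: the user is typing on a laptop
clutch.ring_press()         # the user deliberately enables input
clutch.on_gesture("pinch")  # accepted
clutch.ring_press()         # the user disables input again
clutch.on_gesture("swipe")  # ignored
print(clutch.accepted)
```

The point of the design is that the start and stop of input become an explicit, physical action, exactly like the click of a mouse button.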

I’m curious to see what the next evolution of these natural interfaces will be. As I’ve said, I’m pretty excited by the work that Apple did and I cannot wait to try it. But at the same time, I think it’s a starting point and not the end goal. I hope that other companies, instead of just copying Apple, will try to improve on its system.

(Header image by Apple)
