Back in June, Meta revealed its latest XR R&D efforts through a handful of prototypes, each designed to prove out a different concept. The next step, the company says, is to bring it all together into a single package that could one day reach real users.

If you spend time keeping up with the latest XR research (as we like to do), you’ll know that the vast majority of the work is about proving that something is possible, not necessarily about whether it’s viable as tech that could be made into a real product, where challenges like cost, manufacturing, and durability can nix even the most innovative technologies.

Take, for instance, the ‘Starburst’ HDR VR headset prototype that Meta recently revealed. The goal of the research was to simply build something that worked so the team could quantify the impact of 20,000 nit HDR on immersion. But from a market-viability standpoint, the tech used in the prototype is far too big, draws too much power, and gets way too hot to be built into a real product without completely changing the underlying architecture.

And that’s the case for many of Meta’s R&D prototypes—they are designed to demonstrate a concept, but they aren’t necessarily designed to be market-viable.

But Meta’s next prototype, the company said alongside the reveal of its latest R&D in June, is an effort not just to demonstrate a concept, but to bring much of the company’s latest research together into a single headset—and in an architecture that could actually form the foundation of future products.

That doesn’t mean it would be cheap, and it doesn’t mean it would be easy, but if it comes together as the company hopes, it will “be a game changer for the VR visual experience,” says Michael Abrash, Chief Scientist at Meta Reality Labs.

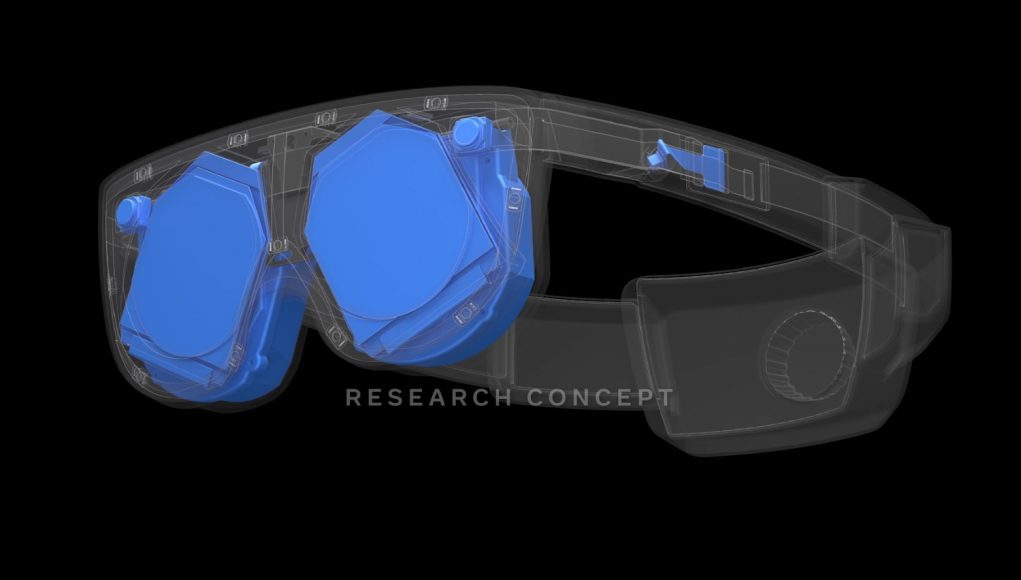

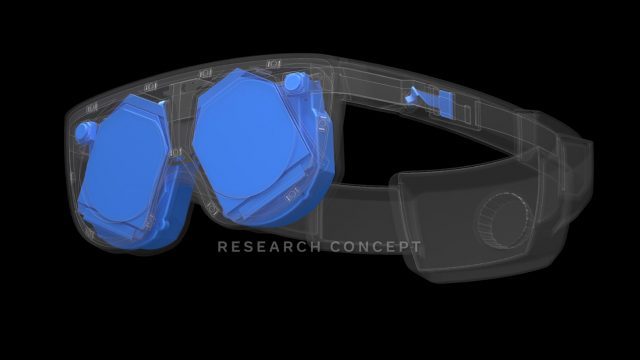

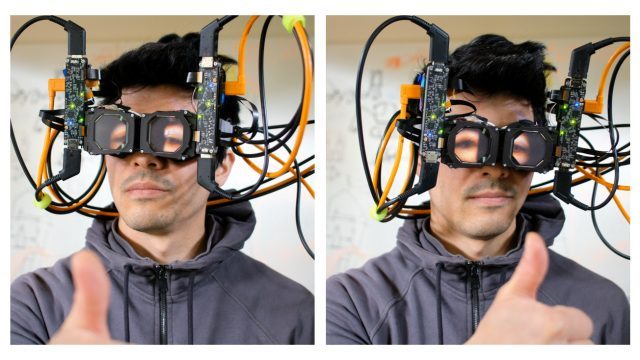

The prototype concept is called Mirror Lake, and while Meta has been careful to say that it hasn’t even been built yet, the company says its goal is to pack many of its latest innovations into this single system.

According to Douglas Lanman, Director of Display Systems Research at Meta Reality Labs, Mirror Lake will include electronic varifocal lenses (including prescription correction), multi-view eye-tracking, holographic pancake lenses, advanced passthrough, reverse passthrough, and a laser backlight in a goggles-like form factor. Let’s briefly break those down one by one.

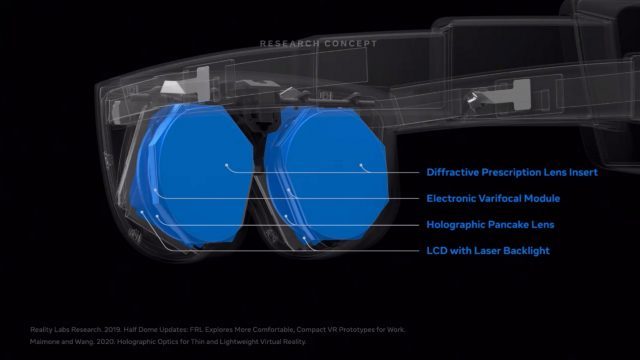

Electronic Varifocal Lenses

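Electronic varifocal lenses would let the headset adjust the focal distance for each eye individually, so the eyes focus at the same depth as the virtual object they’re looking at, and, with built-in prescription correction, would also eliminate the need for eyeglasses. This would make the headset not only more immersive but also more comfortable, as it would fix the longstanding ‘vergence-accommodation conflict’ that afflicts most headsets: the eyes converge on a virtual object’s apparent distance while having to focus on a display that sits at one fixed focal distance.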
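To put rough numbers on the vergence-accommodation conflict, here is a minimal Python sketch. It assumes a conventional headset whose optics place the image at a single fixed focal distance of 1.5 m (an illustrative figure, not a Meta spec) and measures the mismatch in diopters (1 divided by the distance in meters). The `varifocal_focus()` helper is hypothetical: it simply sets the focus to wherever the eyes converge, which is the basic idea behind a varifocal display; a real system would need eye-tracking to estimate that distance.

```python
def diopters(distance_m: float) -> float:
    """Optical demand, in diopters, for something distance_m meters away."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


# Illustrative assumption: fixed-focus optics put the image at 1.5 m.
FIXED_FOCAL_DISTANCE_M = 1.5


def vac_mismatch(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Gap (in diopters) between where the eyes converge and where they must focus."""
    return abs(diopters(vergence_distance_m) - diopters(focal_distance_m))


def varifocal_focus(vergence_distance_m: float) -> float:
    """Hypothetical varifocal controller: move the focal plane to the gaze depth."""
    return vergence_distance_m


# A virtual object held at roughly reading distance.
object_m = 0.5
fixed_mismatch = vac_mismatch(object_m, FIXED_FOCAL_DISTANCE_M)
varifocal_mismatch = vac_mismatch(object_m, varifocal_focus(object_m))

print(f"fixed focus mismatch: {fixed_mismatch:.2f} D")
print(f"varifocal mismatch:   {varifocal_mismatch:.2f} D")
```

With these assumed numbers, the fixed-focus headset leaves a 1.33 D gap between vergence (2 D) and accommodation (about 0.67 D), while the idealized varifocal case closes it to zero.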

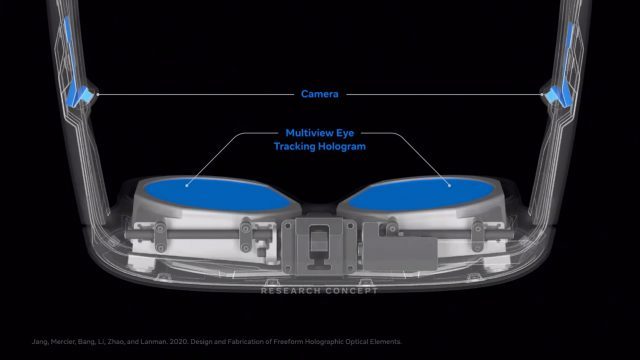

Multi-view Eye-tracking

Multi-view eye-tracking could increase the accuracy of eye-tracking (a keystone for other features like dynamic distortion correction) by giving the system more views of the user’s eye. Most eye-tracking headsets today have a camera positioned near the lens that watches the user’s eye from a sharp angle to estimate its movement, and that sharp angle makes an accurate estimate harder to get. Meta says Mirror Lake could include an additional eye-tracking camera in each strut of the headstrap near the user’s temple. This camera would get a better view of the user’s eye by looking at its reflection in a holographic film embedded in the headset’s lenses.
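Why would a second view help? Meta hasn’t said how Mirror Lake would combine its cameras, so the sketch below is only an illustration of one standard technique, inverse-variance weighting: each camera’s estimate is weighted by how reliable it is, and the fused result is less noisy than either input. It assumes the two estimates are independent and unbiased; the gaze angles and noise variances are hypothetical numbers chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class GazeEstimate:
    """One camera's gaze angle (degrees) and its noise variance (degrees^2)."""
    angle_deg: float
    variance: float


def fuse(estimates: list[GazeEstimate]) -> GazeEstimate:
    """Inverse-variance weighted fusion of independent, unbiased estimates."""
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    angle = sum(w * e.angle_deg for w, e in zip(weights, estimates)) / total
    # The fused variance is always smaller than the smallest input variance.
    return GazeEstimate(angle_deg=angle, variance=1.0 / total)


# Hypothetical values: the oblique in-headset camera is noisier than a temple
# camera viewing the eye via a reflection off the lens.
oblique = GazeEstimate(angle_deg=10.8, variance=1.0)
temple = GazeEstimate(angle_deg=10.2, variance=0.25)
fused = fuse([oblique, temple])

print(f"fused gaze: {fused.angle_deg:.2f} deg, variance {fused.variance:.2f} deg^2")
```

Here the less noisy temple view gets four times the weight, so the fused angle lands at 10.32 degrees with a variance of 0.2, lower than either camera on its own.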

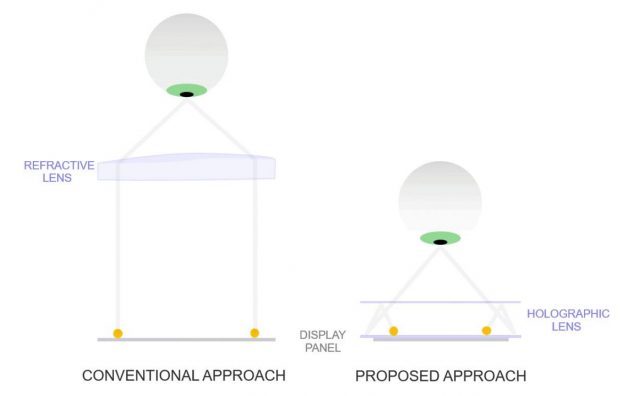

Holographic Pancake Lenses

Holographic pancake lenses are novel lenses that are thinner and lighter while allowing the user’s eye to be closer to the lens, reducing headset bulk considerably.

Advanced Passthrough

Advanced passthrough features would provide a sharper, more realistic view of the outside world for mixed reality. Lanman didn’t go into much detail about a so-called “neural passthrough camera” proposed for Mirror Lake, but presumably it would be akin to a next-gen version of Quest 2’s Passthrough+ feature, which aims for accurate depth representation and low latency.

Reverse Passthrough

Reverse passthrough is a somewhat funky technology that projects the user’s eyes onto the outside of the headset for others to see. Since eyes are such an important part of communication, this feature aims to make it less weird to have a conversation with someone who is wearing a headset.

Laser Backlight

Laser backlighting would allow the headset to display a wider range of colors to more accurately represent what a user would see in real life. It could also make for a brighter display with a wider dynamic range.
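One way to see why narrow-band light sources widen the color range is to compare the triangles that different sets of primaries span on the CIE 1931 xy chromaticity diagram. Meta hasn’t specified Mirror Lake’s primaries, so this sketch uses two standard color spaces as stand-ins: Rec. 709, a common display baseline, and BT.2020, whose primaries correspond to monochromatic 630, 532, and 467 nm light of the kind lasers emit.

```python
# CIE 1931 xy chromaticities of each standard's red, green, and blue primaries.
REC_709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
# BT.2020 primaries sit on the spectral locus (monochromatic light).
REC_2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]


def triangle_area(primaries: list[tuple[float, float]]) -> float:
    """Area of the gamut triangle in the xy plane, via the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return 0.5 * abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))


area_709 = triangle_area(REC_709)
area_2020 = triangle_area(REC_2020)

print(f"Rec. 709 gamut area:  {area_709:.4f}")
print(f"BT.2020 gamut area:   {area_2020:.4f}")
print(f"ratio: {area_2020 / area_709:.2f}x")
```

The BT.2020 triangle comes out roughly 1.9 times larger. Area on the xy diagram is only a crude yardstick, since that diagram isn’t perceptually uniform, but it captures why purer primaries reach more saturated colors.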

– – — – –

Meta has demonstrated many of these technologies independently in various prototypes, but Lanman says the goal is for Mirror Lake to be built around a “practical architecture,” meaning something that’s viable outside of a lab setting and could actually form the basis of a real product.

Abrash said Mirror Lake could offer a glimpse of what a “complete next-gen display [VR] system could look like,” but he also warned that the architecture won’t be conclusively proven (or disproven) until the headset is actually built.

It will be years yet before we see something like Mirror Lake actually reach the market. Meta’s upcoming headset, currently known as Project Cambria, will only include a fraction of the capabilities of Mirror Lake. Even the Holocake 2 prototype—which is more advanced than Cambria—is still several steps behind what Meta is envisioning with Mirror Lake.

Still, Meta CEO Mark Zuckerberg insists that the billions the company is throwing at its XR R&D efforts are not merely academic.

“We’re the company that is the most serious and committed to basically looking at where VR and AR need to be 10 years from now. [We ask] ‘what are the problems that we need to solve’ and just systematically work on every single one of them in order to make progress,” says Zuckerberg. “There’s still a long way to go, but I’m excited to bring all of this tech to our products in the coming years.”