The latest version of NVIDIA’s FCAT VR analysis tool is here and it’s equipped with a wealth of impressive features designed to demystify virtual reality performance on the PC.

NVIDIA has announced a VR-specific version of its FCAT (Frame Capture Analysis Tool) at GDC this week, which aims to give enthusiasts and developers accessible virtual reality rendering metrics to help demystify VR performance.

Back in the old days of PC gaming, the hardware enthusiast’s world was a simple place ruled by the highest numbers. Benchmarks like 3DMark spat out scores for purchasers of the latest and greatest GPU to wear like a badge of honour. The highest frame rate was the primary measure of gaming performance back then, and most benchmark scores were derived from how quickly a graphics card could chuck out pixels from the framebuffer. However, anyone who has been into PC gaming for any length of time will tell you that this rarely gives you a complete picture of how a game will actually feel when being played. It was and is perfectly possible to have a beast of a gaming rig that performs admirably in benchmarks but delivers a substandard user experience when actually playing games.

Over time however, phrases like ‘frame pacing’ and ‘micro stutter’ began creeping into the performance community’s conversations. Enthusiasts started to admit that the consistency of a rendered experience delivered by a set of hardware trumped everything else. The shift in thinking was accompanied (if not driven) by the appearance of new tools and benchmarks which dug a little deeper into the PC performance picture to shed light on how well hardware could deliver that good, consistent experience.

One of those tools was FCAT – short for Frame Capture Analysis Tool. Appearing on the scene in 2013, FCAT aimed to grab snapshots of what the user actually saw on their monitor, measuring frame latency and stuttering caused by dropped frames – outputting that final imagery to captured video with an accompanying stream of rendering metadata right alongside it.

Now, NVIDIA is unveiling what it claims is the product of a further few years of development capturing the underbelly of PC rendering performance. FCAT VR has been officially announced and brings with it a suite of tools which increase its relevancy to a PC gaming landscape now faced with the latest rendering challenge. VR.

What is FCAT VR?

At its heart, FCAT VR is a frametime analysis tool which hooks into the rendering pipeline grabbing performance metrics at a low level. FCAT gathers information on total frametime (time taken by an app to render a frame), dropped frames (where a frame is rendered too slowly) and performance data on how the VR headset’s native reprojection techniques are operating (see below for a short intro on reprojection).

The original FCAT package was a collection of binaries and scripts which provided the tools to capture data from a VR session and convert that data into meaningful capture analysis. However, with FCAT VR, NVIDIA have aimed for accessibility and so the new package is fully wrapped in a GUI. FCAT VR comprises three components: the VR Capture tool, which hooks into the render pipeline and grabs performance metrics; the VR Analyser tool, which takes data from the Capture tool and parses it to form human-readable graphs and metrics; and the VR Overlay, which attempts to give a user inside VR a visual reference on application performance from within the headset.

When the FCAT VR Capture tool is fired up, prior to launching a VR game or application, its hooks stand ready to grab performance information. Once FCAT VR is open, benchmarking is activated using a configured hotkey and it then sets to work dumping a stream of metrics to raw data on disk. Once the session is finished, you can then use supplied scripts (or write your own) to extract human readable data and output charts, graphs or anything your stat-loving heart desires. As it’s scripted, it’s highly customisable for both capture and extraction.

So What Does FCAT VR Bring to VR Benchmarking?

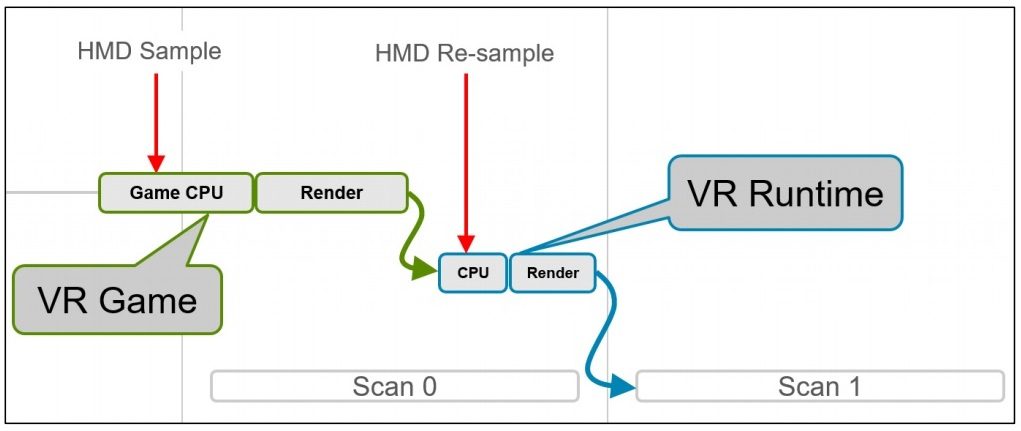

In short, a whole bunch – at least in theory. As you probably know, rendering for virtual reality is a challenging prospect and the main vendors for today’s consumer headsets have had to adopt various special rendering techniques to allow the common-or-garden gaming PC to deliver the sort of low latency, high frame rate (90 FPS) performance required. These techniques are designed as backstops for when system performance dips below the desired minimum, something which deviates from the ‘perfect world’ scenario for rendering a VR application. The diagram below illustrates a simplified VR rendering pipeline (broadly analogous to all PC VR systems).

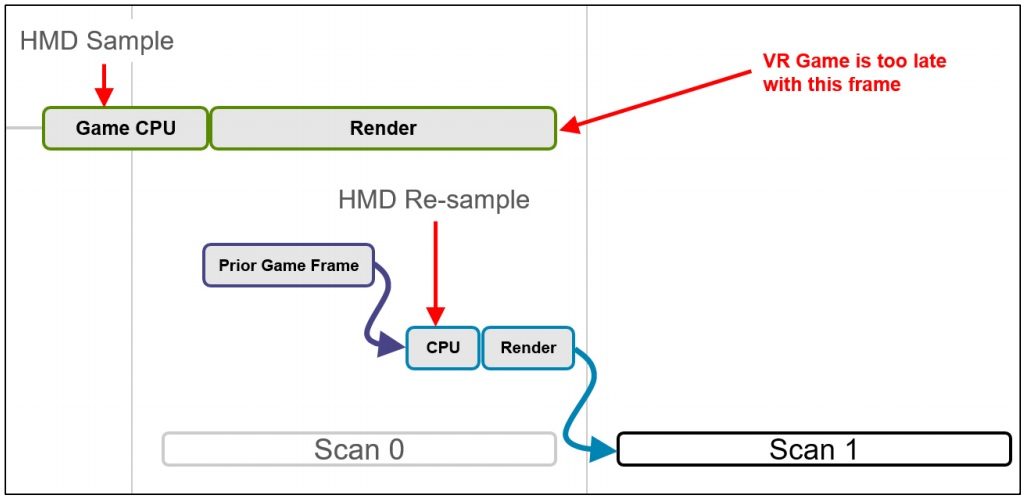

However, given the complexity of the average gaming PC, even the most powerful rigs are prone to performance dips. This may result in the VR application being unable to meet the perfect world scenario above where 90 FPS is delivered without fail every second to the VR headset. Performance dips result in dropped frames, which can in turn result in uncomfortable stuttering when in VR.
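To put a number on that target: at 90 FPS the headset expects a new frame roughly every 11.1 ms. The short Python sketch below works through that arithmetic. It is a simplified model that ignores the runtime's own compositing time, and the function names are illustrative assumptions rather than anything taken from FCAT VR.

```python
import math

REFRESH_HZ = 90  # refresh rate quoted in the article for consumer headsets


def frame_budget_ms(refresh_hz: float = REFRESH_HZ) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


def intervals_consumed(frame_time_ms: float, refresh_hz: float = REFRESH_HZ) -> int:
    """Refresh intervals a frame occupies (1 means it arrived on time)."""
    return max(1, math.ceil(frame_time_ms / frame_budget_ms(refresh_hz)))


def missed_intervals(frame_time_ms: float, refresh_hz: float = REFRESH_HZ) -> int:
    """Refresh intervals that pass without a fresh frame from the app."""
    return intervals_consumed(frame_time_ms, refresh_hz) - 1
```

Under this model a frame that takes 15 ms overruns the 11.1 ms budget and occupies two refresh intervals, so the headset has to fill one interval with something other than a freshly rendered frame.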

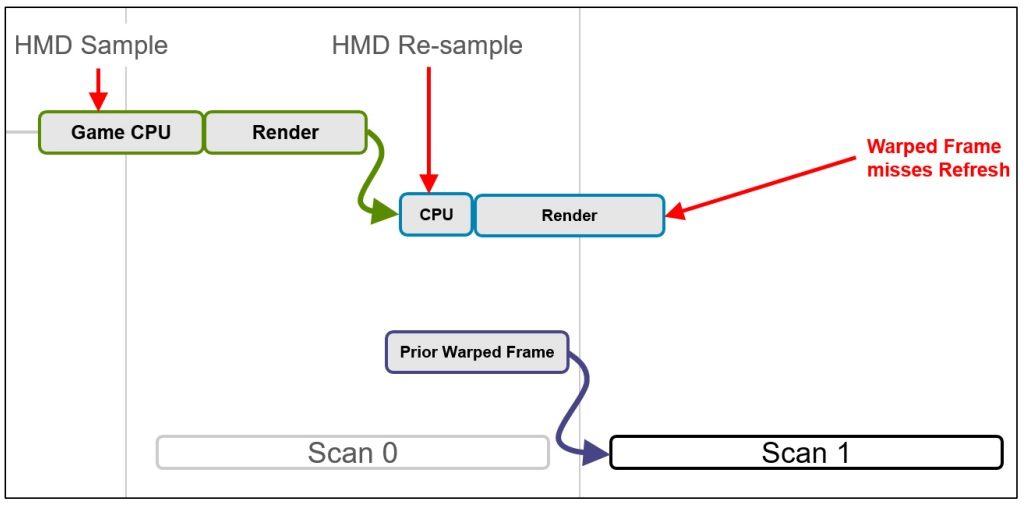

Chief among these techniques are the likes of Asynchronous Time Warp (and now Space Warp) and Reprojection. These are techniques that ensure what the user sees in their VR headset, be that an Oculus Rift or an HTC Vive, matches that user’s movements in VR as closely as possible. Data sampled at the last possible moment is used to morph frames to match the latest movement data from the headset, filling in the gaps left by inconsistent or under-performing systems or applications by ‘warping’ (producing synthetic) frames to match. Even then, these techniques can only do so much. Below is an illustration of a ‘Warp Miss’, when neither the application nor the runtime could provide an up-to-date frame to the VR headset.

It’s a safety net, but one which has been incredibly important in reducing the sensations of nausea caused by the visual disconnect, stutter and jerkiness experienced when frames are dropped. Oculus in particular are now so confident in their arsenal of reprojection techniques that they lowered their minimum PC specifications upon the launch of their proprietary Asynchronous Spacewarp technique. None of these techniques should be (and indeed aren’t designed to be) a silver bullet for poor hardware performance. When all’s said and done though, there’s no substitute for a solid frame rate which matches the VR headset’s display.

Either way, these are techniques implemented at a low level and are largely transparent to any application which is sat at the head of the rendering chain. Therefore, metrics gathered from the driver which measure when performance is dipping and when these optimisations are employed are vital to understanding how well a system is running. This is where FCAT VR comes in. NVIDIA summarises the new tool’s capabilities as below (although there is a lot more under the hood we can’t go into here):

Frame Time — Since FCAT VR provides detailed timing, it’s possible to measure the time it takes to render each frame. The lower the frame time, the more likely it is that the app will maintain a frame rate of 90 frames per second needed for a quality VR experience. Measurement of frame time also allows an understanding of the PC’s performance headroom above the 90 fps VSync cap employed by VR headsets.

Dropped Frames — Whenever the frame rendered by the VR game arrives too late for the headset to display, a frame drop occurs. It causes the game to stutter and increases the perceived latency which can result in discomfort.

Warp Misses — A warp miss occurs whenever the runtime fails to produce a new frame (or a re-projected frame) in the current refresh interval. The user experiences this miss as a significant stutter.

Synthesized Frames — Asynchronous Spacewarp (ASW) is a process that applies animation detection from previously rendered frames to synthesize a new, predicted frame. If FCAT VR detects a lot of ASW frames, we know a system is struggling to keep up with the demands of the game. A synthesized frame is better than a dropped frame, but isn’t as good as a rendered frame.
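As a rough illustration of how these four metrics might be summarised once a capture has been taken, here is a minimal Python sketch. The record layout, the `Interval` and `summarise` names and the kind labels are all hypothetical, chosen for this example; they are not FCAT VR's actual output format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

BUDGET_MS = 1000.0 / 90  # about 11.1 ms per refresh at 90 Hz

# Hypothetical labels for what the headset showed in each refresh interval.
KINDS = ("rendered", "synthesized", "dropped", "warp_miss")


@dataclass
class Interval:
    kind: str                              # one of KINDS
    frame_time_ms: Optional[float] = None  # app render time, when a new frame was rendered


def summarise(intervals):
    """Return each kind's share of the capture plus average frame time and headroom."""
    if not intervals:
        raise ValueError("capture is empty")
    counts = Counter(i.kind for i in intervals)
    times = [i.frame_time_ms for i in intervals
             if i.kind == "rendered" and i.frame_time_ms is not None]
    avg = sum(times) / len(times) if times else None
    return {
        "share": {k: counts[k] / len(intervals) for k in KINDS},
        "avg_frame_time_ms": avg,
        # Positive headroom: rendered frames finish inside the 90 Hz budget on average.
        "headroom_ms": None if avg is None else BUDGET_MS - avg,
    }
```

A capture with a high share of synthesized frames, or any meaningful share of dropped frames and warp misses, would point to a system struggling to hold 90 FPS, while a comfortably positive headroom figure suggests room to raise quality settings.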

What Does This All Mean?

In short, and for the first time, enthusiasts will have the ability not only to gauge high level performance of their VR system, but crucially the ability to dive down into metrics specific to each technology. We can now analyse how active and how effective each platform’s reprojection techniques are across different applications and hardware configurations. For example, how effective is Oculus’ proprietary Asynchronous Time Warp when compared with OpenVR’s asynchronous reprojection? It can also give enthusiasts vital information to pinpoint where issues may lie, or perhaps give a developer key pointers on where their application could use some performance nips and tucks.

All that said, we’re still playing with the latest FCAT VR package to fully gauge the scope of information it provides and how successfully it’s presented (or indeed how useful the information is). Nevertheless, there’s no doubt that FCAT’s latest incarnation delivers the most comprehensive suite of tools to measure VR performance we’ve yet seen, and goes a long way to finally demystifying what is going on deeper in the rendering pipeline. We look forward to digging in a little deeper with FCAT VR and we’ll report back around the tool’s planned release in mid-March.