During its presentation at Unite Berlin, Magic Leap gave attendees a crash course in developing experiences for Magic Leap One (ML1). Along the way, we found out quite a bit more about how the device works and what we can expect to experience with it.

Alessia Laidacker, interaction director, and Brian Schwab, director of the Interaction Lab, led the session, which served to teach developers how to approach creating content for the new device and operating system.

Here's what we learned...

More Content Partners Revealed

In recent months, Magic Leap has disclosed its work with Sigur Ros and the NBA. But during the Unite Berlin event, two new content partners were revealed.

Meow Wolf, which specializes in turning art galleries into augmented reality experiences, will bring its talents to Magic Leap, as will Funomena, a game developer that's working on some "outside of the box" content (not necessarily games) for the device.

Speaking of the NBA, during the Q&A session, Schwab clarified Shaquille O'Neal's description of his ML1 experience from the Recode Code Conference in February.

The experience consisted of a virtual screen, allowing Shaq to watch an NBA game, with 3D assets on his tabletop. Shaq was also able to interact with those elements to extract in-game information while the screen floated in space in front of him.

Field of View Will Be Limited, But Not a Problem

Without revealing specifics, Schwab addressed the elephant in the room: ML1's field of view will be limited. However, he stressed that developers, knowing the confines of the field of view, should prepare to adjust for it with a "less is more" approach.

He also noted that filling up the user's field of view can distract from the overall immersive experience and may bring attention to the limits of the screen, causing users to treat the content more like watching TV, rather than experiencing an extension of the real world. And keeping people in the real world is Magic Leap's objective with immersive content designed for the device.

"The real world is a primary actor in whatever experience that you're making. By using less pixels, each pixel is much more magical," said Schwab. "If I have a whole slew of things out in front of me, I'm more like watching a bunch of pixels. If I'm more watching the real world, then something magical comes in front of me [then] there are more real-world cues, which makes that one pixel that much more magical. If everything is pixels, then nothing is pixels."

To compensate for the limited field of view, developers can use tactics, such as motion in the periphery or spatialized audio cues, to draw the user's gaze in the right direction.
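
To make that tactic concrete, here is a minimal sketch of how an app might decide whether content needs a cue at all and, if so, from which side. Everything in it is our own illustration rather than Magic Leap's API: the function names are hypothetical, and the 20-degree half-angle is a placeholder, since the company has not revealed the actual field of view.

```python
import math

def angle_between_deg(a, b):
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_angle))

def choose_cue(head_pos, head_forward, head_right, target_pos, half_fov_deg=20.0):
    """Return "none" if the target is inside the assumed view cone,
    otherwise which side a peripheral or audio cue should come from.

    half_fov_deg is a made-up placeholder, not a published ML1 figure.
    Assumes the target is not located exactly at the head position.
    """
    to_target = tuple(t - h for t, h in zip(target_pos, head_pos))
    if angle_between_deg(head_forward, to_target) <= half_fov_deg:
        return "none"
    # Positive projection onto the head's right vector means the target is to the right.
    side = sum(x * y for x, y in zip(head_right, to_target))
    return "cue_right" if side >= 0 else "cue_left"

if __name__ == "__main__":
    head = (0.0, 0.0, 0.0)
    forward = (0.0, 0.0, 1.0)
    right = (1.0, 0.0, 0.0)
    print(choose_cue(head, forward, right, (0.0, 0.0, 2.0)))
    print(choose_cue(head, forward, right, (3.0, 0.0, 1.0)))
```

In a real app, the returned side would drive whatever the developer chooses as the cue, such as a flicker of motion at the edge of the display or a spatialized sound placed in that direction.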

ML1 Will Track User Experience Data

While Magic Leap has previously mentioned that head pose, hand gestures, eye tracking, and voice commands will all play a part in the ML1 user interface, the Unite Berlin presentation did reveal a fifth element that developers will have at their disposal: geo/temporal information from user interactions.

"We're actually aggregating some of this information over time so that we can tell you trends, both personally as well as [for] multiple users that are in an area," said Schwab. "We have access to new streams of information that give you back experiential power through better user context."

When this information is applied to eye tracking, developers can use it to cue interactive content that draws users' attention back to the action if their gaze strays to other content.

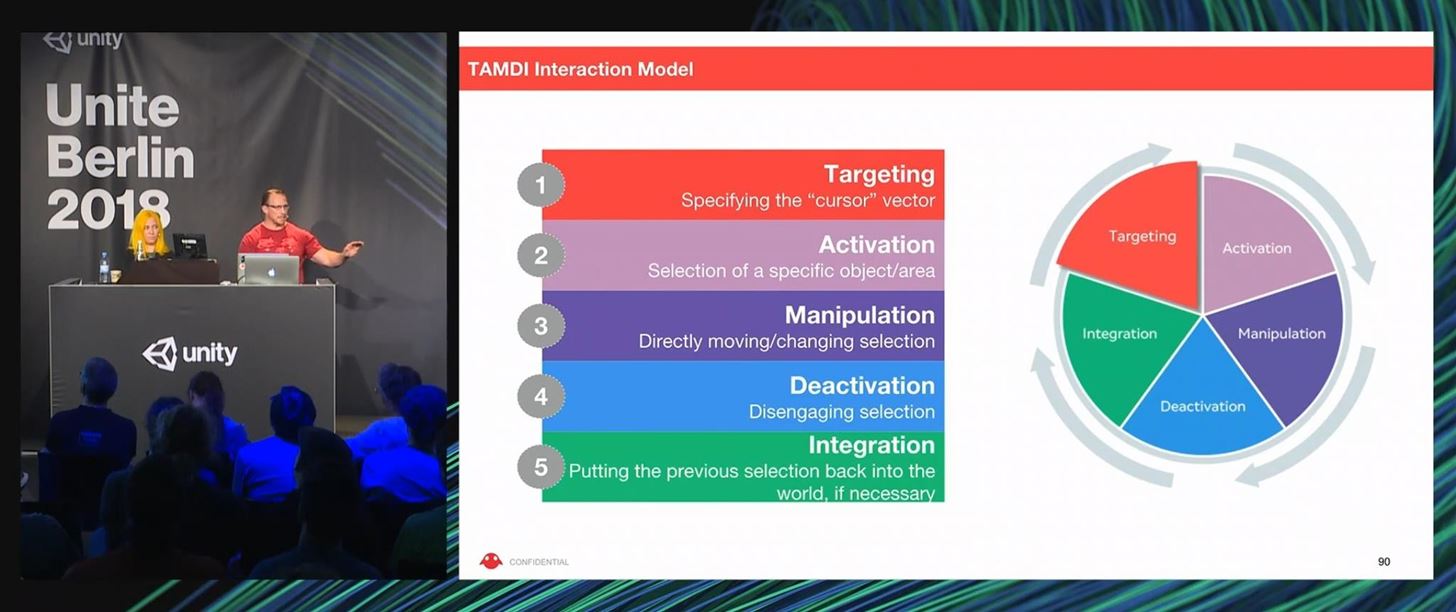

Transmodal Interaction Model Merges Various User Inputs to Determine Action

Speaking of user input, Magic Leap has developed its own transmodal interaction model that combines the position and movement of the head, eyes, and hand within the context of an action to identify target objects or focus areas. It's called TAMDI, or target, acquisition, manipulation, deactivation, and integration, which represents a cyclical process by which the developers can measure user input and return the correct interactive result.

As a hypothetical, let's say I'm playing a game of three-card monte in ML1. Using the transmodal interaction model, the app can tell from the position of my eyes and head and the positioning of my hand that I'm selecting (or even picking up) the middle card.

Moreover, the app can track my eyes and head to confirm that I'm actually following the cards and not just making a lucky guess.
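
Magic Leap did not show how the inputs are actually combined, so the following is only a rough sketch of the general idea of fusing several input rays to choose a target. The weights, function names, and scoring rule are all our own assumptions, and the code does not attempt to model the TAMDI cycle itself.

```python
import math

# Hypothetical weights for each input mode; not taken from Magic Leap.
WEIGHTS = {"head": 0.2, "eyes": 0.5, "hand": 0.3}

def _unit(v):
    """Scale a non-zero 3D vector to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return tuple(x / norm for x in v)

def _alignment(origin, direction, target):
    """Cosine between a ray's direction and the direction to the target,
    floored at 0 so targets behind the ray contribute nothing."""
    to_target = _unit(tuple(t - o for t, o in zip(target, origin)))
    d = _unit(direction)
    return max(0.0, sum(a * b for a, b in zip(d, to_target)))

def pick_target(rays, candidates):
    """rays: {mode: (origin, direction)}; candidates: {name: position}.
    Returns the best-scoring candidate name and the full score table."""
    scores = {}
    for name, position in candidates.items():
        scores[name] = sum(
            WEIGHTS[mode] * _alignment(origin, direction, position)
            for mode, (origin, direction) in rays.items()
        )
    return max(scores, key=scores.get), scores

if __name__ == "__main__":
    cards = {"left": (-0.3, 0.0, 1.0), "middle": (0.0, 0.0, 1.0), "right": (0.3, 0.0, 1.0)}
    rays = {
        "head": ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)),
        "eyes": ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)),
        # Hand held low and to the right, pointing at the middle card.
        "hand": ((0.1, -0.2, 0.3), (-0.1, 0.2, 0.7)),
    }
    choice, _ = pick_target(rays, cards)
    print(choice)
```

With all three rays converging on the middle card, it scores highest. If the eyes and hand swing toward the right card while the head stays put, the heavier eye and hand weights shift the choice to the right card, which is the kind of disambiguation the three-card monte example describes.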

Magic Kit Is Magic Leap's AR Toolkit

Earlier this year, we reported that Magic Leap had trademarked the term "Magic Kit." Now, we know more about what that name means.

Magic Kit is a toolkit, not unlike ARKit, ARCore, or Microsoft's Mixed Reality Toolkit, that helps developers tap into the ML1's capabilities, such as interacting with the mapped environment, and the full array of user interaction methods.

Environment Toolkit, a plugin that will come with Magic Kit, will help developers account for obstacles and objects in a space and define how content will interact and navigate the space. Environment Toolkit also gives developers tools for identifying seating locations, hiding spots, and room corners so that content will behave in a contextual manner that enhances the overall sense of immersion.

The company's Interaction Lab will be distributing a package of examples and source code for Magic Kit to assist developers in taking advantage of the ML1's overall feature set.

Spatial Mapping Takes a Different Path Compared to HoloLens

Magic Leap is introducing a new concept in environment mapping with BlockMesh, a mesh type that's available in the MLSpatialMapper prefab in the Lumin SDK for Unity.

"BlockMesh spatially subdivides the real world into a set of cubic blocks, axis aligned with the coordinate system origin of the current head tracking map," explained Laidacker from the stage and via a graphic during the presentation.

"The mesh blocks are generated from the internal reconstruction model so that whatever geometry is inside these cubical regions in the reconstruction, is represented as a connected triangle mesh. Although mesh blocks are a connected triangle mesh internally, the meshes between blocks are not connected. This enables easy and fast updating of regions of the mesh when changes occur in the environment."

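The subdivision Laidacker describes can be illustrated with a few lines of code. This is a sketch of the general technique of bucketing geometry into axis-aligned cubic blocks, not the Lumin SDK's implementation: the 1-meter block size and all function names are our own assumptions.

```python
import math
from collections import defaultdict

# Hypothetical block edge length in meters; the real value was not stated.
BLOCK_SIZE_M = 1.0

def block_index(point, block_size=BLOCK_SIZE_M):
    """Integer (i, j, k) index of the axis-aligned cubic block containing a point.
    Flooring keeps negative coordinates in the correct block."""
    return tuple(math.floor(c / block_size) for c in point)

def group_by_block(points, block_size=BLOCK_SIZE_M):
    """Bucket reconstructed surface points by the block they fall in.
    Each bucket would be meshed on its own, unconnected to its neighbors."""
    blocks = defaultdict(list)
    for p in points:
        blocks[block_index(p, block_size)].append(p)
    return dict(blocks)

def blocks_to_rebuild(changed_points, block_size=BLOCK_SIZE_M):
    """Only blocks touched by a change need new meshes; the rest stay as-is."""
    return {block_index(p, block_size) for p in changed_points}

if __name__ == "__main__":
    scan = [(0.1, 0.1, 0.1), (0.9, 0.2, 0.3), (1.5, 0.0, 0.0)]
    print(group_by_block(scan))
    print(blocks_to_rebuild([(1.5, 0.0, 0.0), (1.7, 0.2, 0.1)]))
```

Because the meshes in neighboring blocks are independent, moving a chair only dirties the handful of blocks it occupied and now occupies, which is where the fast regional updates come from.
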
Gesture Recognition Also Differs From HoloLens Approach

During the section on gestures, Laidacker provided some insight into how ML1 recognizes the eight gestures available for user input. When developers enable gestures, ML1 takes head pose into account and toggles the depth sensor to scan the near-range field instead of farther out, where it scans the environment.

By contrast, Microsoft built custom silicon that automatically handles the transition. The holographic processing unit (HPU) in the HoloLens automatically detects hands, switches to close range when a hand is detected, and then reverts to far range when the hand disappears.
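
The difference between the two approaches boils down to what triggers the switch in depth range. The sketch below is a deliberately simplified illustration of that contrast, with names we made up; it ignores head pose and is not based on either device's actual SDK.

```python
from enum import Enum

class DepthRange(Enum):
    NEAR = "near"  # close-range scanning for hands
    FAR = "far"    # longer-range scanning for the environment

def developer_toggled_range(gestures_enabled):
    """ML1-style policy as described on stage: the range follows
    whether the developer has enabled gestures."""
    return DepthRange.NEAR if gestures_enabled else DepthRange.FAR

def hand_triggered_range(hand_visible_per_frame):
    """HoloLens-style policy: the range follows hand detection frame by frame,
    switching to near when a hand appears and back to far when it leaves."""
    return [DepthRange.NEAR if visible else DepthRange.FAR
            for visible in hand_visible_per_frame]

if __name__ == "__main__":
    print(developer_toggled_range(True))
    print(hand_triggered_range([False, True, True, False]))
```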

The Controller Is Here to Stay...For Now

During the Q&A session, when asked if the controller is here to stay, Schwab affirmed that it is, at least for the near future.

Schwab believes ML1 has a strong set of gestures, and the team is working to improve them and add to the menu of options. Still, a controller is a recognized input paradigm that users find comfortable, and it is a better input method in some cases, such as input that requires high precision, typing, or controlling content outside of the field of view. The controller also delivers a better feedback mechanism with its haptic motor.

"One of the reasons that the controller is here to stay is that it gives you a chunk of haptic feedback directly in those nerves," said Schwab. "It is also a much higher fidelity track for now."

Anyone who has used HoloLens gestures to try to precisely place, scale, or align objects in 3D space can relate to wanting a higher precision and lower latency input mechanism for certain tasks, even if it takes a bit away from the magic of hand gesture control.

However, Laidacker added, the mix of controller input and hand gestures (or the omission of one input or the other) is up to the developer's discretion. The duo believes that many developers may prefer hand gestures as a more natural interaction method, particularly for experiences serving non-gamers, a section of the consumer market largely unfamiliar with handheld, gaming-style controller dynamics.

There was a lot to digest in the presentation, but those were the biggest reveals. If you crave more, we've embedded the video of the entire presentation (which starts at the three-hour, 45-minute mark) below for your own edification.
