We’ve seen some incredible things emerging from Leap Motion since the company hired visionary filmmaker Keiichi Matsuda last year as a vice president leading a new London research studio.

Most recently, a video emerged from the company showing how a desk can become interactive when paired with the company’s finger and hand tracking technology, as well as an AR headset design called North Star, which the company is open-sourcing.

The video shows the nuanced way digital objects can slip into the real world, and how you might interact with these objects using natural gestures. In case you are unfamiliar, Matsuda created the impactful 2016 short film Hyper-Reality, which imagined a future overtaken by AR.

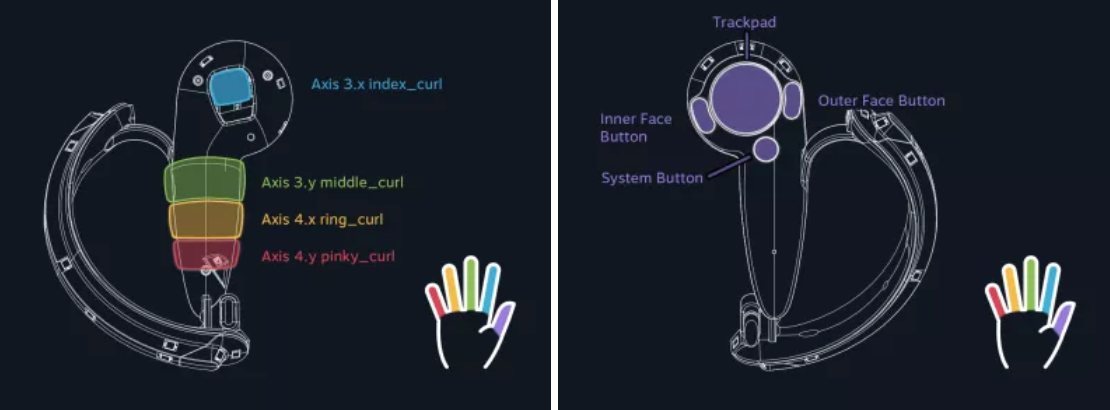

While the videos Leap Motion produced in recent weeks show off some interesting concepts for AR software design, I wonder how far this interaction paradigm can go without offering haptic feedback to users. Valve, for instance, is developing controllers which strap to your hand for realistic grasp and release sensations, and these “Knuckles” controllers can track your finger movements with a fair degree of accuracy too. If you’ve ever held a bow in VR, you know how satisfying it can feel to pull back a bow string with even simple vibration feedback. Adding more realistic feelings of grasping the bow and pinching the arrow may make it feel even more immersive.

So how does Leap Motion’s technology fit into the AR/VR market? The first-generation consumer VR headsets all rely on physical controllers for interaction. Meanwhile, the first consumer AR headsets have been limited in terms of displays and tracking. HoloLens, for instance, only recognizes the simplest of gestures while being priced far higher than current VR systems. How far can interactions go without highly sensitive physical buttons to press?

Here’s Matsuda’s answer to that question:

For some experiences, total immersion is a clear goal; simulations of physical experiences, gaming, etc. These all improve when we engage more senses. I believe that those cases will be niche, and that AR and VR will have to make do with sound and vision for the foreseeable future. This general, everyday mixed reality will be much more subtle but no less profound in its impact. Our physical world will be populated with virtual materials that can’t be touched, smelt or tasted, but nevertheless have their own behaviours and properties, and exist as a legitimate part of our environments. Just as the invention of glass transformed our built environment, making new architectures possible, these new virtual materials will again change the way that we design and use space.

Overall, the point seems to be that carrying controllers around is likely to be a “niche” scenario once VR/AR technology reaches the point of becoming part of our daily lives. I also asked Leap Motion whether its technology might be paired with a controller, and a spokesperson wrote that “although it would be a nontrivial challenge to make it feel natural, it is an avenue we may explore in the future.”