Vivid Vision thinks so, and wants it to help millions of people. Formerly known as Diplopia, the startup believes that VR can help treat common vision problems like lazy eye and crossed eyes, which happen when the brain ignores input from the weaker eye. Its solution, a VR experience that combines medical research with gameplay mechanics, is now rolling out to eye clinics across the US.

Recently, we caught up with Vivid Vision co-founder James Blaha to ask him how he’s retraining people’s brains using VR and hand tracking technology. You can also see James later today at 5pm PT on our Twitch channel, where he’ll be demoing Vivid Vision live and taking your questions.

Every tech startup wants to change the world, but it’s not often that they want to change people’s brains. What does that mean for you?

How you perceive the world is a very personal thing. I was never sure what 3D vision was supposed to look like, which left me wondering what I might be missing out on. We’re trying to improve how people perceive the world around them so they can do the things they love.

What are the challenges in bringing “flow” and fun gameplay to a medical application?

It’s very challenging to balance the requirements of the training with fun game mechanics. We think that making the game fun, and getting people into “flow” where they are just reacting and enjoying themselves, is critical to the success of the training. We design every game starting from the relevant vision science, then try to incorporate game mechanics that fit well with the visual tasks that need to be completed.

What’s the role of hand controls in the game? What does it offer players?

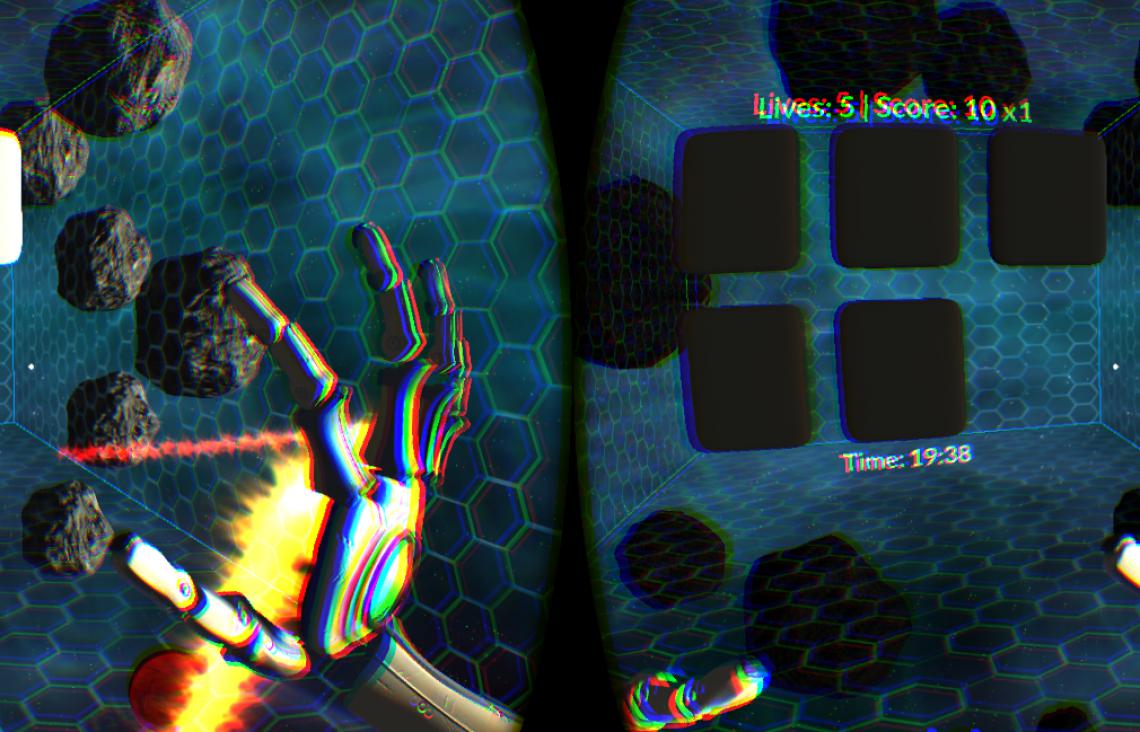

Hand-eye coordination tasks are very important when it comes to usefully applying depth perception. There is some evidence that having people do motor tasks during perceptual learning increases the rate of learning. Disparity cues are largest and most useful at closer distances.

We don’t want to just increase a person’s ability to score well on an eye test; we want their improved vision to translate into their daily life. Using hand tracking, we can force people to judge depth with both eyes and reach out to exactly the right distance. We can have people learn to catch and throw naturally.

The second part of this is that most of the people using our software don’t play games at all. Hand tracking is easier for non-gamers to pick up than a controller or a mouse and keyboard.
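To put a rough number on the point that disparity cues are largest at close range: for two points separated in depth by Δd at viewing distance d, the relative binocular disparity is approximately I·Δd/d², where I is the interpupillary distance (IPD). Halving the viewing distance therefore roughly quadruples the disparity signal. The Python sketch below is purely illustrative and is not taken from Vivid Vision's software; the 63 mm IPD and the 1 cm depth step are assumed example values.

```python
import math

# Assumed typical adult interpupillary distance in metres (illustrative; real IPDs vary).
IPD_M = 0.063


def vergence_angle(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle (radians) between the two eyes' lines of sight when fixating a point straight ahead."""
    return 2.0 * math.atan(ipd_m / (2.0 * distance_m))


def relative_disparity_arcmin(distance_m: float, depth_step_m: float, ipd_m: float = IPD_M) -> float:
    """Relative disparity (arcminutes) between a point at distance_m and one depth_step_m farther away."""
    delta = vergence_angle(distance_m, ipd_m) - vergence_angle(distance_m + depth_step_m, ipd_m)
    return math.degrees(delta) * 60.0


if __name__ == "__main__":
    # Same assumed 1 cm depth step, evaluated at several viewing distances.
    for d in (0.5, 1.0, 2.0, 5.0):
        print(f"{d:4.1f} m: {relative_disparity_arcmin(d, 0.01):6.2f} arcmin")
```

Running it shows the disparity for the same depth step shrinking rapidly with distance, which is one reason reaching and catching tasks at arm's length give the visual system a strong binocular signal to work with.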

Normally, audio is an essential part of making compelling gameplay. What’s it like to design a game where you can’t provide auditory cues to help people achieve tasks?

We do have to be careful with how we design the audio. We have to put a lot of work into hiding depth cues and delivering certain parts of the game to only one eye or the other. The brain is very good at working around any deficits in sensory input; blind people, for example, can learn to use echolocation to navigate. This means we have to be careful with how we use sound so that we aren’t giving people enough information to continue ignoring their weaker eye.

What’s your plan for bringing Vivid Vision to as many people as possible?

Vivid Vision is now available in select eye clinics nationwide. You can visit our website to find a clinic in your area. We are also still planning on releasing a game for home use when the Oculus Rift CV1 comes out.

What’s involved with your current medical study, and what do you hope to discover?

We’re well underway on the study in collaboration with UCSF. Right now, we’re looking for patients in the San Francisco Bay Area to participate. (If you’re interested, you can contact us through our website or email contact@seevividly.com). We want to know exactly how effective our software is for different age groups with different kinds of lazy eye, what the optimal training regime is, and which techniques are the most effective for the different types of lazy eye.

What will be the most unexpected way that VR transforms the way we live, think, and experience the world?

I see VR as a way to finely and accurately control sensory input to the brain. Right now, VR is just visual and auditory input, but I think it will expand to the other senses as well in the coming years. Combined with good sensors, VR is a platform to provide stimuli to the brain and measure how it responds. By studying this feedback loop, we can start to design stimuli that change how the brain works, to improve function. The more powerful the technology gets, the more powerful a platform it is for rewiring ourselves.
