Military contractor Engineering & Computer Simulations (ECS) has received a grant from the federal Small Business Innovation Research program, likely ranging from $500,000 to $1.5 million, to pilot a virtual reality training program for U.S. Army medics.

Founded in 1997, ECS is a Florida-based contractor that builds digital training and other tech solutions for the U.S. military.

The company today announced that it has received a ‘Phase II’ grant from the federal Small Business Innovation Research (SBIR) program, an annual fund of more than $3 billion which aims to support private companies in developing new technologies that both fit federal needs and show potential for commercialization.

The grant will support the development of a virtual reality training program for U.S. Army medics under the umbrella of the Army’s ‘Synthetic Training Environment System’ (STE) program, which covers digital training of all sorts.

While ECS didn’t announce the amount of the grant, SBIR documents say that most Phase II grants range from $500,000 to $1.5 million. The company skipped Phase I, a smaller grant focused on the initial concept; Phase II focuses on building a functional prototype.

ECS says its VR medic training program is designed to integrate with the Army’s existing Tactical Combat Casualty Care procedures.

The VR training program will include “multi-player integration, instructor dashboard and analytics, STE integration, and a training effectiveness evaluation,” the company says.

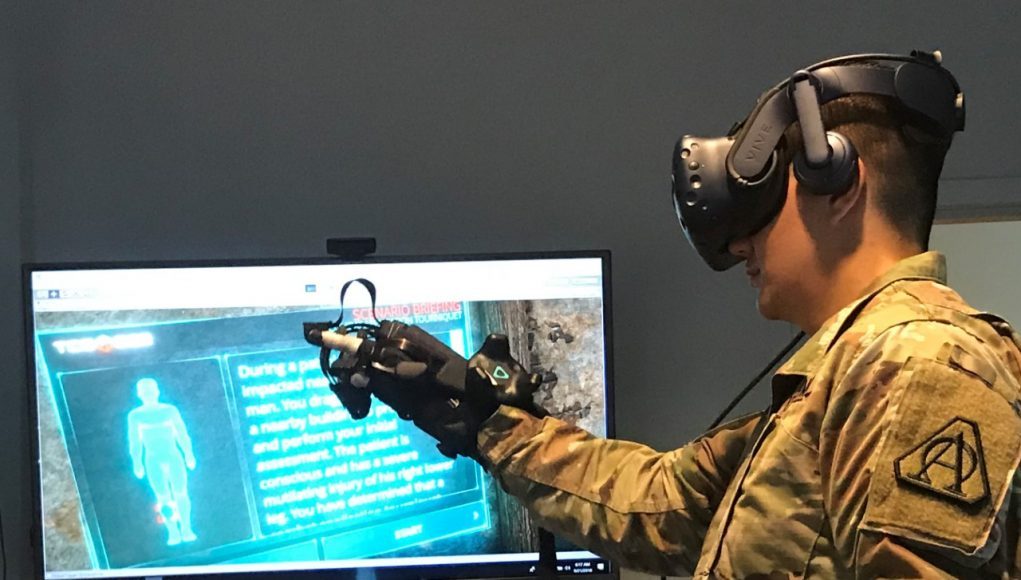

ECS plans to partner with the Mayo Clinic to guide the medical aspects of the system, and with HaptX, whose advanced haptic gloves it will use to increase the realism of the VR training program.

HaptX—which makes perhaps the most advanced haptic gloves presently available—has been positioning its product toward virtual reality training and other non-consumer applications. In late 2019 the company announced that it raised $12 million in funding to continue development of its gloves.

Bulky but impressive, the HaptX gloves offer both force-feedback and detailed haptics. They can lock the wearer’s hand in a position that simulates the resistance of a physical object, and they create convincing sensations of touch with an array of micro-pneumatic haptics across the palm and fingers. We most recently got to try the HaptX gloves in 2018.

ECS hopes that using the gloves will make its VR training program more effective by enhancing the realism of the simulation and allowing trainees to feel the sensations of holding virtual tools and interacting with patients.

For now the project is in the pilot phase, but if proven effective it could be rolled out widely for Army medic training in the future.