Hands-on Vive Focus Plus Hand Tracking SDK

I have just tried the hand tracking solution that the Vive Hand Tracking SDK offers on the Vive Focus Plus, and I want to tell you about my experience with it and how it compares with other hand tracking technologies, like the ones offered by Facebook or Ultraleap.

It’s been a long time since I last wrote an article about the Vive Focus Plus, and it has been interesting to explore what’s happening in the Vive Wave ecosystem again. While the spotlight is all on Oculus and its Quest, Pico and HTC are actually evolving their hardware and software offerings with almost no one talking about it. I think it would be great if the community were better informed about all the devices that are out there.

Vive Hand Tracking SDK video review

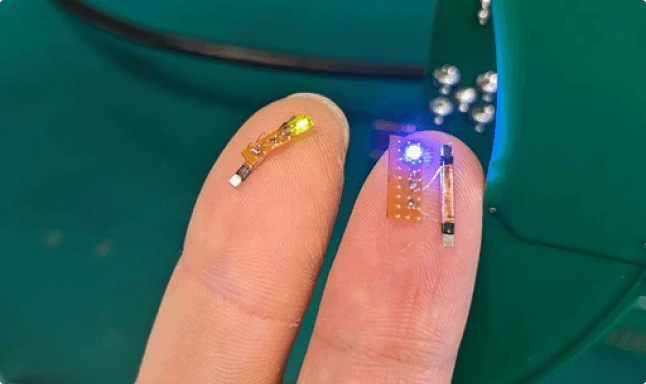

I’ve made a nice YouTube video where I say random stuff and explore the Vive Hand Tracking SDK, first in Unity and then in action with my hands in VR. You can watch it here:

For the usual text review, please keep on reading.

Vive Hand Tracking SDK

HTC has been working on its own hand tracking solution for a while. The Vive Hand Tracking SDK, as it is called, has always been compatible with all Vive devices. But while the PC VR plugin has always tracked many skeletal points of the hands, the mobile version was originally only able to detect some basic gestures. HTC announced that it was going to bring joint tracking to mobile too, but when I visited the company’s headquarters in Taiwan, people there told me that full-hand tracking was more likely to arrive with the next generation of devices.

Some days ago, my friend and ergonomics expert Rob Cole (yes, the one who tweaked the Valve Index) pointed me to a post on Reddit where someone was using the Vive Hand Tracking SDK on the Valve Index.

Checking its documentation again after more than a year, I noticed that the library has now reached version 0.1.0 and is fully compatible not only with PC headsets but also with mobile ones. This means that full-skeleton hand tracking is already possible on the current Vive Focus Plus. What’s more, the SDK also works on mobile phones, making it one of the most cross-device hand tracking solutions on the market.

Curious about its performance, I decided to give it a go.

Vive Hand Tracking Unity SDK

I’ve been very pleasantly surprised by how the Vive Hand Tracking SDK has been designed. I’ve not always been a huge fan of the structure of Vive SDKs, but I have to say that the company is improving a lot in this respect. From a developer’s point of view, I think the SDK is really nice.

First of all, as I’ve said, it is compatible with many devices: PC VR, standalone VR, and mobile phones. In the standalone realm, it is compatible with all Vive Wave-compatible devices, which include HMDs from HTC, Pico, iQiYi, Shadow Creator, and many more. This wide compatibility is admirable and pretty unique in its field.

Another feature I loved is that it has been built in layers, and it is as customizable as you want. The SDK is composed of two layers: a hand-tracking engine, and the APIs and facilities that use the data from the tracking engine to provide hand-based input. The first one detects the position and orientation of the various hand joints from the camera images; the second one uses this information to extract higher-level data, for instance gesture detection, or scripts that let you easily use a hand pose to trigger something in your XR application. The nice thing is that these two layers are separate, and the Vive SDK lets you replace the lower one while keeping the upper one unchanged. So you can use whatever hand-tracking engine you want (even one you wrote yourself), attach it to the high-level hand input API provided by Vive, and your application should keep working in the same way, without changing its source code. This is very versatile.
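To make the idea more concrete, here is a minimal sketch of this kind of layering in Python. It is not the actual Vive SDK API (the real SDK is a Unity/C# plugin): `HandFrame`, `TrackingEngine`, `GestureInput`, `CameraEngine`, and `CustomEngine` are hypothetical names I made up for illustration, and both engines just return fixed data. What matters is that the upper layer depends only on the abstract engine interface, so swapping the engine doesn’t touch the application logic.

```python
from __future__ import annotations

from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class HandFrame:
    """Hypothetical data handed from the engine layer to the input layer."""
    joints: list[tuple[float, float, float]]  # joint positions in meters
    confidence: float                          # 0.0 = lost, 1.0 = certain
    gesture: str                               # e.g. "fist", "five", "unknown"


class TrackingEngine(ABC):
    """Lower layer: turns sensor data into hand data."""

    @abstractmethod
    def poll(self) -> HandFrame | None:
        """Return the latest hand frame, or None if no hand is tracked."""


class GestureInput:
    """Upper layer: application-facing API that only knows the interface."""

    def __init__(self, engine: TrackingEngine, min_confidence: float = 0.5) -> None:
        self.engine = engine
        self.min_confidence = min_confidence

    def is_gesture(self, name: str) -> bool:
        frame = self.engine.poll()
        return (frame is not None
                and frame.confidence >= self.min_confidence
                and frame.gesture == name)


class CameraEngine(TrackingEngine):
    """Stand-in for a vendor engine; a real one would process camera images."""

    def poll(self) -> HandFrame | None:
        # 21 joints is just an illustrative count.
        return HandFrame([(0.0, 0.0, 0.3)] * 21, confidence=0.9, gesture="five")


class CustomEngine(TrackingEngine):
    """Stand-in for an engine you wrote yourself."""

    def poll(self) -> HandFrame | None:
        return HandFrame([(0.0, 0.0, 0.3)] * 21, confidence=0.8, gesture="fist")


def app_logic(hands: GestureInput) -> str:
    # Application code: identical no matter which engine is plugged in.
    return "grab" if hands.is_gesture("fist") else "idle"


print(app_logic(GestureInput(CameraEngine())))  # idle
print(app_logic(GestureInput(CustomEngine())))  # grab
```

The design choice here is plain dependency inversion: `app_logic` never knows which engine it is talking to, so replacing the engine is a one-line change where the `GestureInput` is constructed.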

Thanks to this separation, you could also replace the tracking engine with a hand simulator, and this is exactly what Vive already provides in the SDK: when testing hand-tracked apps in Unity, you can use the “simulator engine” to simulate hand input. It gives you many buttons to simulate hand poses in your application (e.g. open hand, “like” gesture, etc.), so you can test and debug your application in the editor before actually building and deploying it. It is very handy for us developers.
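A simulator fits this layered design naturally. The sketch below is again hypothetical Python, not the SDK’s actual simulator (which is a Unity component with its own buttons): `SimulatorEngine`, `PRESETS`, and the finger-curl representation are my own assumptions. Each “button” just selects a canned hand pose, and the simulator exposes the same kind of `poll()` method a camera-based engine would, so the layer above can’t tell the difference.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HandFrame:
    finger_curl: dict[str, float]  # 0.0 = straight, 1.0 = fully curled
    confidence: float


# Hypothetical presets, one per simulator "button".
PRESETS: dict[str, dict[str, float]] = {
    "open": {"thumb": 0.0, "index": 0.0, "middle": 0.0, "ring": 0.0, "pinky": 0.0},
    "fist": {"thumb": 1.0, "index": 1.0, "middle": 1.0, "ring": 1.0, "pinky": 1.0},
    "like": {"thumb": 0.0, "index": 1.0, "middle": 1.0, "ring": 1.0, "pinky": 1.0},
}


class SimulatorEngine:
    """Fake tracking engine driven by editor buttons instead of cameras."""

    def __init__(self) -> None:
        self._current: str | None = None  # no hand until a button is pressed

    def press(self, preset: str) -> None:
        if preset not in PRESETS:
            raise ValueError(f"unknown preset: {preset}")
        self._current = preset

    def release(self) -> None:
        self._current = None

    def poll(self) -> HandFrame | None:
        if self._current is None:
            return None
        # Simulated hands are always tracked with full confidence.
        return HandFrame(dict(PRESETS[self._current]), confidence=1.0)


sim = SimulatorEngine()
sim.press("like")
frame = sim.poll()
print(frame.finger_curl["thumb"], frame.confidence)  # 0.0 1.0
sim.release()
print(sim.poll())  # None
```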

The SDK has been updated since the last time I used it, and it is now finally compatible with Unity XR and the XR Plugin Management package.

These are the features that I appreciated the most. Beyond them, you find the usual things you would expect from a hand tracking SDK: low-level hand data (the pose and confidence of the tracked joints), high-level gestures (like, OK, five, fist, etc.), and the ability to animate rigged 3D hand models. There is also satisfactory documentation and some samples to learn from.
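As an example of how high-level gestures can be derived from low-level joint data, here is a deliberately naive Python heuristic. It is my own illustration, not how the Vive SDK classifies gestures: the function names, the 1.5 distance ratio, and the 0.6 confidence threshold are arbitrary assumptions. A finger counts as extended if its tip is much farther from the wrist than its knuckle, the set of extended fingers is mapped to a gesture, and low-confidence frames are rejected instead of guessed.

```python
from __future__ import annotations

import math

Vec3 = tuple[float, float, float]
FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def is_extended(wrist: Vec3, knuckle: Vec3, tip: Vec3, ratio: float = 1.5) -> bool:
    """Naive heuristic: a straight finger's tip is much farther from the wrist than its knuckle."""
    return math.dist(wrist, tip) > ratio * math.dist(wrist, knuckle)


def classify(wrist: Vec3, fingers: dict[str, tuple[Vec3, Vec3]],
             confidence: float, min_confidence: float = 0.6) -> str:
    """Map low-level joints (wrist, per-finger knuckle and tip) to a gesture name."""
    if confidence < min_confidence:
        return "unknown"  # don't guess from unreliable joint data
    extended = {name for name in FINGERS if is_extended(wrist, *fingers[name])}
    if extended == set(FINGERS):
        return "five"
    if not extended:
        return "fist"
    if extended == {"thumb"}:
        return "like"
    return "unknown"


wrist = (0.0, 0.0, 0.0)
open_hand = {f: ((0.0, 0.08, 0.0), (0.0, 0.16, 0.0)) for f in FINGERS}
closed_hand = {f: ((0.0, 0.08, 0.0), (0.0, 0.09, 0.0)) for f in FINGERS}
print(classify(wrist, open_hand, confidence=0.9))    # five
print(classify(wrist, closed_hand, confidence=0.9))  # fist
print(classify(wrist, open_hand, confidence=0.3))    # unknown
```

Real SDKs use far more robust classifiers, but the structure is the same: raw joints plus confidence in, a discrete gesture label out.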

My experience with the SDK inside Unity has been very positive.

Hand tracking performance on the Vive Focus Plus

So, after playing around with the SDK in Unity, I built one of the samples, donned my Vive Focus Plus, and checked how hand tracking actually performs inside the headset. And well, I don’t have good news for you.

The first thing I noticed is that the tracking is very slow. It certainly doesn’t run at 75 Hz; my impression is more like 10 Hz or so. It seems that the current hardware can’t cope with the computations required for reliable tracking, and this slowness also seems to hurt tracking quality.

The system lost my hands many times during my tests, even when they were right in front of my face, which is the ideal position. Hands get detected well in poses similar to popular gestures (OK, fist, five, etc.), but in other poses finger tracking is imprecise and there are many misdetections. Tracking only works when the two hands are apart: if they touch, at least one of them loses tracking. Also, since the Focus has just two front cameras, the tracking FOV is not that wide, and the farther the hands are from the center of vision, the worse they are tracked.

Overall performance is mediocre: I found the system somewhat usable if you only need gestures, but not reliable enough for full hand skeleton tracking.

The best hand tracking solution I have ever tried is still Ultraleap’s, followed by the Oculus Quest’s.

Final Thoughts

Hand tracking on the Vive Focus Plus is not at a usable stage yet: it is good for experimenting and getting used to the technology, but it’s not ready for a commercial product.

But remember that this Hand Tracking SDK is marked as beta and its version is still 0.1, so I wouldn’t be too harsh on it. Given what I was told some years ago, HTC is probably continuing to improve its SDK for its upcoming standalone device. With more horsepower, and probably the Snapdragon XR2 chipset, which adds hardware acceleration for various XR tasks (especially AI ones), that device could likely run hand tracking at the required framerate and with better accuracy. Remember that accurate tracking is hard at a low framerate (unless you do tracking by detection, but it would be crazy to do that on a VR headset), because the hand moves a lot between two consecutive updates; so more performance could also mean more accuracy.
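A quick back-of-the-envelope calculation shows why framerate matters so much for frame-to-frame tracking. Assuming a hand moving at a moderate 1 m/s (my assumption, not a measurement), at my rough estimate of 10 Hz it travels 10 cm between two updates, while at 75 Hz it travels only about 1.3 cm. The `SEARCH_RADIUS_CM` value below is a hypothetical window in which a tracker looks for the hand near its previous position; it is not a parameter of the Vive SDK.

```python
# Hypothetical: a frame-to-frame tracker searches for the hand
# only within this distance of its previous position.
SEARCH_RADIUS_CM = 5.0


def displacement_per_update_cm(hand_speed_m_s: float, tracking_hz: float) -> float:
    """Distance the hand travels between two consecutive tracking updates, in cm."""
    return hand_speed_m_s * 100.0 / tracking_hz


def keeps_lock(hand_speed_m_s: float, tracking_hz: float) -> bool:
    """True if the hand stays inside the tracker's search window between updates."""
    return displacement_per_update_cm(hand_speed_m_s, tracking_hz) <= SEARCH_RADIUS_CM


for hz in (10.0, 75.0):
    d = displacement_per_update_cm(1.0, hz)
    print(f"{hz:.0f} Hz: {d:.1f} cm per update, keeps lock: {keeps_lock(1.0, hz)}")
# 10 Hz: 10.0 cm per update, keeps lock: False
# 75 Hz: 1.3 cm per update, keeps lock: True
```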

Better tracking on new hardware would be a very positive thing: the Vive Hand Tracking SDK is already very well designed, and I really appreciated its modularity and customizability. It’s also great that it works on many devices. If it were paired with a working tracking engine, it could be a great tool for us XR developers. In this sense, HTC’s continued work on its Hand Tracking Unity SDK in preparation for a new device would make sense.

So, let’s hope for a brighter future for this solution. I think that in a few months we’ll discover the true potential of this system, for better or for worse.

And that’s it for this review! What do you think of this new article on the Focus+? Are you interested in hand tracking on Vive devices? Let me know whatever comes to your mind in the comments below!

Disclaimer: this blog contains advertisement and affiliate links to sustain itself. If you click on an affiliate link, I'll be very happy because I'll earn a small commission on your purchase. You can find my boring full disclosure here.