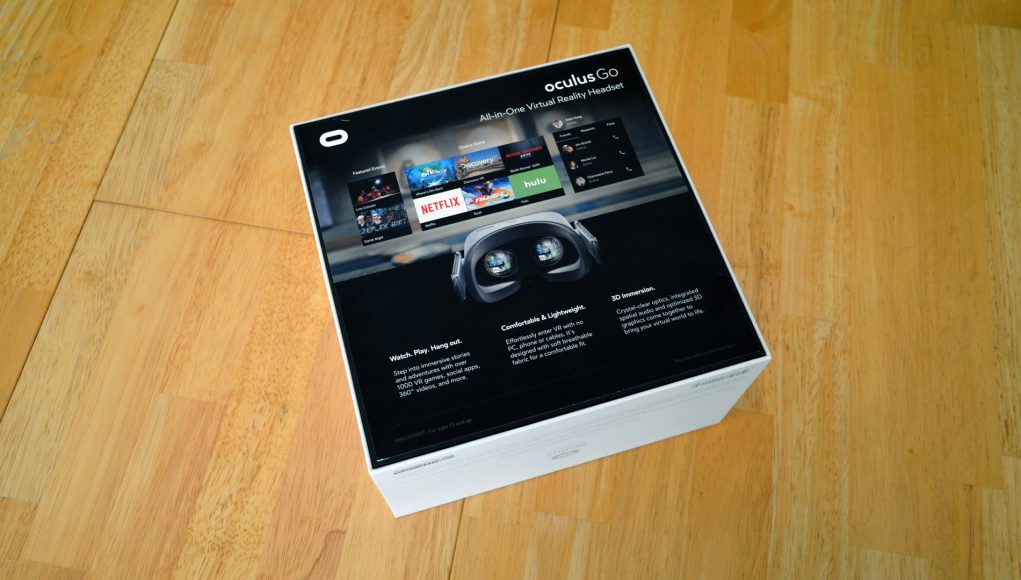

The Oculus Go was released on Tuesday, May 1st at the Facebook F8 developer conference. It is a self-contained, 3-DoF mobile VR headset priced at $200 that is optimized for media consumption and social VR interactions. Facebook showed off four first-party applications: Oculus TV, Oculus Gallery, Oculus Rooms, and Oculus Venues. Oculus Venues will be treated as public spaces governed by Oculus’ updated Terms of Service, which include a code of conduct intended to ensure safe online spaces. In order to enforce the code of conduct, Oculus will need to do some amount of capturing and recording of what happens in these virtual spaces. That has a number of privacy implications, and it sets up a tradeoff: cultivating safe online spaces may erode aspects of freedom of speech and 4th Amendment rights to privacy within these virtual spaces.

LISTEN TO THIS EPISODE OF THE VOICES OF VR PODCAST

Editor’s Note: We’re catching up on publishing a small backlog of Voices of VR episodes. This episode was recorded around the time of the Oculus Go launch back in May, but includes broader discussion about privacy in VR that remains relevant today (and well into the future).

Facebook announced at F8 that it is planning to moderate other Facebook networks with AI, so it’s likely that Oculus will also eventually try to moderate virtual spaces with AI. What will it mean to have our public virtual interactions mediated by AI overlords? This brings up questions about the limits and capabilities of supervised machine learning to technologically engineer cultural behaviors. The Cleaners documentary at Sundance went behind the scenes with Facebook’s human content moderators to demonstrate how subjective the enforcement of terms of service policies can be, leaving avant-garde artists susceptible to false-positive censorship that results in permanent bans from these communication platforms with no appeals process. How will AI solve a problem where it’s impossible to arrive at objective definitions of free speech that span the full spectrum from artistic expression to terrorist propaganda? Facebook is becoming larger than any single government, but it doesn’t have the same level of democratic accountability, whether through democratic models of virtual governance or through appeals processes for bans.

So while Oculus Go is an amazing technological achievement of hardware, software, and user experience, there are some larger open questions about the role of Facebook and what will happen to our data on this platform. What is their plan for virtual governance? How will they deal with the long-term implications of bans? What does the appeals process look like for false positives of code of conduct violations? What data are being recorded? How will Facebook notify users when they start recording new data or change data recording policies? What data will be sent from Oculus to Facebook? Why don’t these online spaces have peer-to-peer encryption? Does Facebook want to eventually listen in on all of our virtual conversations? How will Facebook navigate the balance between free speech and the desires of governments to control speech?

Oculus’ privacy policy is incredibly open-ended. Without a real-time database of what data are recorded, users have no full transparency into what is being captured and recorded across these different contexts, and so there is no accountability. I received specific answers to some of these questions in this episode, as well as in episode #641 in talking with Oculus’ chief privacy architect, but the privacy policy allows Oculus/Facebook to change what is recorded and where at any moment.

At F8, I had a chance to talk with Oculus Go product manager Madhu Muthukumar about the primary use cases & hardware features of the Oculus Go, but also some of the larger questions about privacy, free speech, and virtual governance. Facebook/Oculus seem to be taking an iterative approach to these questions, but they also tend to react to problems as they arise rather than thinking through the long-term philosophical implications of their technologies and taking preventative measures in advance.

Just arrived to #F8.

I'll be covering all of the VR/AR news from Facebook/Oculus.

I'll be roaming around the next couple of days covering the VR/AR sessions, doing interviews, and I have a demo at 1p.

The keynote starts at 10a & will be livestreamed here: https://t.co/dKhR5NaVSb pic.twitter.com/zYJx23Q07l— Kent Bye VoicesOfVR (@kentbye) May 1, 2018

Facebook is dealing with a lot of trust issues, and their message at F8 was that they’re 100% dedicated to building technologies that connect people despite the risks. Their argument is that technology can always be abused, but that shouldn’t scare us into not building solutions, because Facebook believes that on the whole it is doing more good than bad. The problem is that Facebook is siphoning our private data and eroding privacy, and their quantified world of social relationships has arguably weakened intimate connections in favor of inauthentic interactions. Facebook claims to want to cultivate community, but they fail to connect the dots for how their behaviors around privacy have eroded trust and intimacy, and will continue to weaken the community and connection they claim to be all about. At the end of the day, Facebook is a performance-based marketing company using the mechanism of surveillance capitalism, and ultimately these financial incentives are what drive their success and behaviors.

Virtual reality has the potential to move away from the tyranny of abstractions inherent in communicating through the written word, but Oculus has not promised peer-to-peer encryption to ensure that social interactions in VR have the same level of privacy as personal conversations in real life. They are leaving that door open, which sends me the message that they would like to listen in on everything we say or do in VR. Is that the world that we really want to create? Jaron Lanier argues that it absolutely isn’t. At TED this year, Lanier said, “We cannot have a society in which, if two people wish to communicate, the only way that can happen is if it’s financed by a third person who wishes to manipulate them.”

Support Voices of VR

- Subscribe on iTunes

- Donate to the Voices of VR Podcast Patreon

Music: Fatality & Summer Trip