This time last year, computer vision company uSens introduced a stereo camera module capable of hand tracking. Now, uSens can achieve the same thing with just a smartphone's camera.

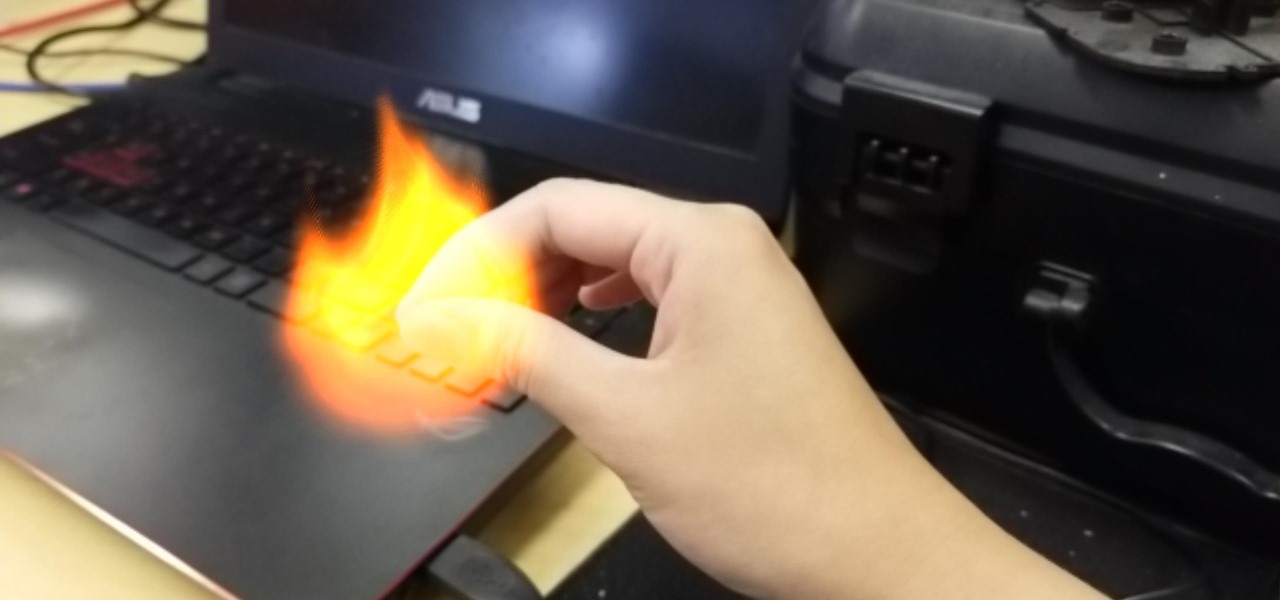

On Thursday, at the Augmented World Expo in Santa Clara, uSens unveiled the beta version of its uSensAR Hand Tracking SDK, which enables developers to integrate hand tracking and 3D motion recognition into augmented and virtual reality mobile apps on iOS and Android devices. Working from a smartphone's RGB camera, uSensAR Hand Tracking applies computer vision and deep learning to track the full skeletal dynamics of the hand, rather than just the fingertips.

So far, mainstream adoption of augmented reality has been the province of the smartphone, so any major interface innovation for AR on smartphones is a significant development. While Apple and Google have brought markerless tracking of horizontal and vertical surfaces, image recognition, and even multi-user experiences to mobile apps via ARKit and ARCore, interactions with AR content have been limited to touchscreen interfaces rather than the hand gesture interfaces of devices like the HoloLens and Meta 2.

"uSens is proud to push AR to the next frontier, by enabling developers to create engaging, enjoyable and entertaining augmented reality experiences made more intuitive, for the smartphone user—by simply moving their hands and fingers in the air," said Anli He, the co-founder and CEO of uSens, via a statement.

"This opens a whole new world of possibilities for developers, enabling them to create a truly one-of-kind experience for a mainstream audience," said He. "Similar to how touchscreens enabled even the most technologically challenged to embrace smartphones, providing an easy and natural way for users to engage with AR/VR objects and environments will play a major role in boosting consumer adoption."

So what has changed over the past year to let uSens accomplish the same level of tracking without external hardware? The key is machine learning.

"We have been working on phone RGB camera-based hand tracking since we started to target the phone market directly in the middle of last year. It is similar to our algorithm for our deep learning based technology; the difference is now, we train the algorithm to learn single camera input," said Yaming Wang, VP of product and operation at uSens, in a statement to Next Reality.

"It can provide skeleton information and joints based on our algorithm, though its performance will not be as good as stereo or depth camera since its FoV is much smaller. But we believe it provides good enough performance for many use cases."

According to Wang, uSens is already working with Chinese social app Meitu to integrate the technology into its AR camera effects. The company is also working with an undisclosed game studio, though Wang hints that the studio is publicly traded on Nasdaq and publishes "networked games in a social setting."

Although mobile AR has accelerated the adoption of AR among consumers, the touchscreen still represents a barrier between the user and the content. Just browsing through the best mobile AR apps available today offers a few exciting ideas as to how hand tracking could improve already great experiences.

Games like PuzzlAR, which already employs one of the more distinctive mobile AR interfaces by integrating a gaze cursor with touchscreen gestures, could be even more immersive if players could manipulate puzzle pieces in space with their hands. Making lines in Just a Line would be more intuitive with fingers as the drawing implement rather than the smartphone. And an educational app like Froggipedia could much more closely emulate the experience of dissecting a frog without the mess.

Of course, with smartphones, one hand is essentially tied behind the user's back, as something needs to hold the device up. As such, uSens' technology could also facilitate swifter innovation in smartglasses, particularly Android-based wearables. A camera-based tracking solution would afford hardware makers the luxury of omitting a dedicated depth sensor.

Hopefully, the AR hardware industry is thinking along these very lines, which could mean better AR experiences even sooner than we think.
