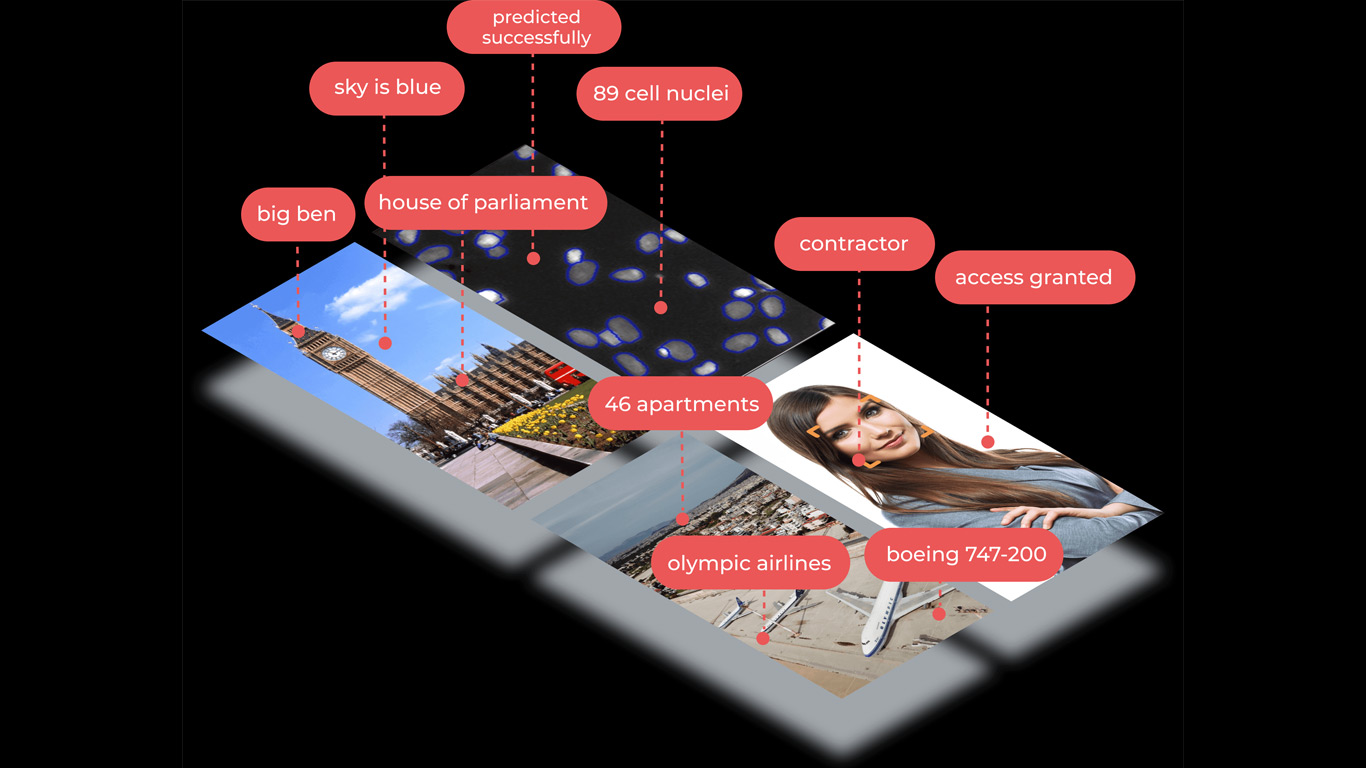

Chooch.ai hands-on: an interesting object detection SDK (for AR?)

A few days ago, I saw a very intriguing video on Twitter by Robert Scoble, in which he tried an app that could understand in real time what objects were around him. I was curious, so I asked him what the app was, and he answered that it was Chooch.ai.

Chooch.ai is an AI SDK for Android and iOS that, thanks to some machine learning magic, is able to see your surroundings and understand what is around you in real time. Do you want to know if it works well? I’ve made a cool video for you! Enjoy watching! There are some funny moments in it, so you can’t miss it 🙂

As you have seen in the video, the system is not perfect yet, mostly for these reasons:

- The detection has a slight delay of 1-2 seconds. This is not critical per se, but it can be a problem because in the meantime the user may have changed their point of view and framed new things, so the labels on screen no longer match what is in front of them;

- Some detections are completely wrong or hilarious: at the beginning of my experiments, I framed some chairs and a table, and the algorithm saw a war drone! While I was on the bus, it interpreted a pipe as a thermonuclear bomb! :O

- There is too much clutter: the system detects 4-5 elements each time, even if you’re framing only one. So maybe one of the detections is correct, but the other ones are wrong or inexact. For instance, when I framed a table, it detected a dining table (which was almost correct), plus people eating (which was completely wrong). I guess the ML system had often seen people eating around a dining table during training, and so it mistakenly detected them. It should be more precise.

But at the same time, I was pretty intrigued by the fact that the system more or less always worked. I tested it in the office, and also outdoors in the evening, while returning home from work. In both these difficult conditions (clutter, shaking phone, bad lighting, etc.), it kept working somehow. It was very cool.

And it is important that, even if it sometimes returned completely weird results, most of the time it understood at least the context the phone was in (e.g. an office vs a street). The results as a whole were never pure nonsense: reading the words on the screen, it was always possible to understand where I was.

The fact that it could give acceptable results even in difficult conditions is a sign that the solution is good and just needs some time to be refined.

I think I will consider this SDK for my AR consultancy work. The performance is good, and I see the potential of using it in an augmented reality application to make augmentations appear depending on the context the user is in (e.g. detecting whether they are in an office or in a kitchen). It could also be the foundation for some kind of AR assistant. Of course, the detected data needs some filtering: personally, I would wait for a detection to persist across several frames before accepting it as valid. The prices are also acceptable, and Chooch.ai is free to use for individual developers (for testing).
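To give an idea of the kind of filtering I mean, here is a minimal Python sketch that accepts a label only after it has appeared in several consecutive frames. It does not use the Chooch.ai SDK at all: I am assuming that each frame simply gives you a list of label strings, and the class name `StableLabelFilter` and the `min_frames` parameter are hypothetical names I made up for this illustration.

```python
from collections import defaultdict


class StableLabelFilter:
    """Accept a label only after it shows up in `min_frames` consecutive frames.

    Hypothetical helper, not part of any SDK: it only assumes that every
    frame yields a list of label strings.
    """

    def __init__(self, min_frames=5):
        self.min_frames = min_frames
        self._streaks = defaultdict(int)  # label -> consecutive frames seen

    def update(self, frame_labels):
        """Feed the labels of one frame; return the set of stable labels."""
        current = set(frame_labels)
        # A label missing from this frame loses its streak entirely.
        for label in list(self._streaks):
            if label not in current:
                del self._streaks[label]
        # Every label present in this frame extends its streak by one.
        for label in current:
            self._streaks[label] += 1
        return {label for label, n in self._streaks.items()
                if n >= self.min_frames}


if __name__ == "__main__":
    # Labels loosely inspired by my table experiment above.
    frames = [
        ["dining table", "war drone"],
        ["dining table", "people eating"],
        ["dining table"],
    ]
    label_filter = StableLabelFilter(min_frames=3)
    for labels in frames:
        stable = label_filter.update(labels)
    print(stable)  # {'dining table'}
```

With a threshold of 3 frames, one-off detections like the war drone never reach the user, while the dining table survives. The price is some extra latency on top of the 1-2 seconds of delay, so the threshold would need tuning on a real device.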

If you are interested, head to Chooch.AI and test it yourself! After that, please let me know your impressions in the comments section or by contacting me on my social media channels!

(Header image by Chooch.ai)
