Having dug into the Lumin SDK documentation on Magic Leap's Creator Portal, we now know more about how the device will function and run software than any single piece of content Magic Leap has released to date has revealed.

So now that much of the mystery (at least on the software side) has been dispelled, join us on the magical journey into the heart of spatial computing.

Lumin OS: We knew that the operating system would be called Lumin. We now know that it is derived from Linux and the Android Open Source Project (AOSP). Applications for Lumin can be built with Magic Leap's own Lumin Runtime engine as well as with common 3D engines such as Unity and Unreal Engine 4.

Lumin Runtime: While apps built on other engines run as immersive apps that operate alone, Lumin Runtime allows multiple apps to run and interact at the same time, displayed in a "single coherent experience." Developers can access 13 platform APIs to work with a scene graph (which defines the coordinates and hierarchy of the space), collision physics, hand gestures, and real-time spatial computing technology for rendering objects, controlling lighting and shadows, and applying real-world occlusion. Running multiple apps in one scene sounds a lot like the "Waking Up with Mixed Reality" demo from 2016.

Platform APIs: The SDK release notes include a couple of eye-popping APIs. An Eye Tracking API can identify the position of the user's fixation point and eye centers, and can detect blinks. The SDK also supports hardware occlusion through an interface that feeds depth data to the platform.

Headpose: The Magic Leap One (ML1) combines data from its array of sensors with visual observations. If visual observations are degraded by low light or occluded cameras, the ML1 falls back on rotation-only tracking until the system obtains sufficient visual data again.
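To make the fallback behavior concrete, here is a minimal sketch of the decision it implies, assuming a hypothetical per-frame input of sensor orientation, a camera-derived position, and a count of tracked visual features. None of these names (`update_headpose`, `MIN_VISUAL_FEATURES`, `TrackingMode`) come from the Lumin SDK, and the sketch only shows the mode switch, not the sensor fusion itself: when visual data is insufficient, orientation keeps updating while position freezes at the last trusted estimate.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)

# Hypothetical threshold: minimum number of tracked visual features
# needed to trust the camera-derived position.
MIN_VISUAL_FEATURES = 50


class TrackingMode(Enum):
    FULL = "rotation + position"  # sensors plus visual observations
    ROTATION_ONLY = "rotation"    # fallback: orientation from sensors only


@dataclass
class HeadPose:
    mode: TrackingMode
    rotation: Quat
    position: Vec3


def update_headpose(
    sensor_rotation: Quat,
    visual_position: Optional[Vec3],
    visible_features: int,
    last_position: Vec3,
) -> HeadPose:
    """Choose a tracking mode for one frame of (hypothetical) input data."""
    visual_ok = visual_position is not None and visible_features >= MIN_VISUAL_FEATURES
    if visual_ok:
        # Enough visual data: orientation from sensors, position from cameras.
        return HeadPose(TrackingMode.FULL, sensor_rotation, visual_position)
    # Low light or occluded cameras: keep tracking rotation,
    # but hold position at the last trusted estimate.
    return HeadPose(TrackingMode.ROTATION_ONLY, sensor_rotation, last_position)


# Usage: a dark room with only 3 visible features triggers the fallback.
pose = update_headpose((1.0, 0.0, 0.0, 0.0), None, 3, (0.0, 1.6, 0.0))
print(pose.mode)  # TrackingMode.ROTATION_ONLY
```

A real system would blend these sources continuously (for example with a filter) rather than hard-switching on a single threshold; the threshold here is purely illustrative.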

Image Tracking: The ML1 continuously captures visual data to construct a map of the environment. When the device returns to a previously mapped area, it recognizes the space and restores content from previous sessions. So those droids you were looking for should still be in the room where you last saw them.

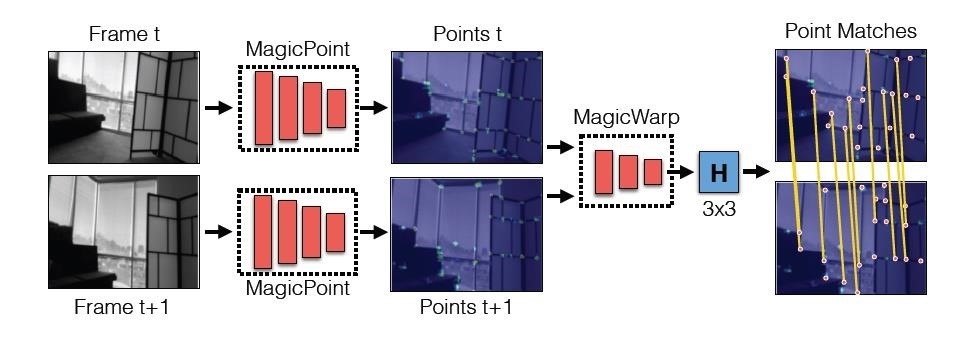

The technology sounds very similar to research published by Magic Leap engineers regarding the use of a pair of neural networks to identify "SLAM-ready" points on images for tracking, as illustrated below.

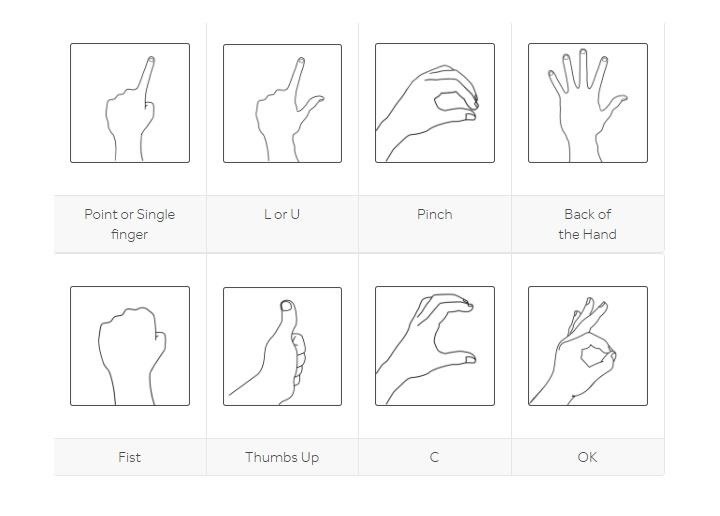

Gestures: The Lumin SDK supports eight hand gestures. By comparison, the Microsoft HoloLens supports three gestures (with a fourth arriving via the Mixed Reality Toolkit), while the Meta 2 revolves around seven variations of its grab gesture.

Considering the recently uncovered patent application for translating sign language, we can expect Magic Leap to keep expanding its gesture recognition repertoire.

Soundfield Audio: Just last week, a patent application filed by Magic Leap for spatialized audio surfaced via the US Patent and Trademark Office, which, as we observed, sounded a lot like the Soundfield Audio feature listed for the ML1.

Now we know that Magic Leap's proprietary technology will be available to developers. A spatial audio solution based on the Soundfield Audio technology will come to Unity and Unreal Engine 4 in a future release, while a Soundfield API for native C++ applications and Magic Leap's own Lumin Runtime engine is due in the near future. In addition, Magic Leap intends to extend support to the audio middleware Wwise and FMOD Studio shortly after this week's Game Developers Conference. In the meantime, developers can use the SDKs from Google's Resonance Audio project.

Lighting: Lumin OS is capable of ambient light tracking. Apps can add light to a scene, but they cannot render the absence of light. However, developers can surround dark colors with bright ones to create the illusion of black or darkness. Also, since objects won't cast shadows, developers will need to either light objects from above or omit lighting. Self-shadowing of virtual objects is supported.
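Why can't an app render darkness? A simple way to see it is a toy model of an additive see-through display, where the light the display emits is added to the light already arriving from the room. The sketch below assumes normalized luminance in [0, 1] and a single scalar per region; it is an illustration of the optics the paragraph describes, not a Magic Leap API, and `perceived_luminance` and `apparent_contrast` are hypothetical helpers. It shows that rendered "black" simply reveals the room, while a bright surround makes an unlit patch read as dark by comparison.

```python
import math


def perceived_luminance(real_world: float, rendered: float) -> float:
    """Toy additive-display model: display light sums with real-world light.

    Luminance is normalized to [0, 1]; the sum is clamped at 1.0.
    A rendered value of 0.0 ("black") adds nothing, so it looks transparent.
    """
    return min(1.0, real_world + rendered)


def apparent_contrast(real_world: float, dark_patch: float, bright_surround: float) -> float:
    """Ratio of surround luminance to patch luminance as it reaches the eye."""
    inner = perceived_luminance(real_world, dark_patch)
    outer = perceived_luminance(real_world, bright_surround)
    return math.inf if inner == 0.0 else outer / inner


room = 0.25  # hypothetical ambient luminance behind the virtual content
print(perceived_luminance(room, 0.0))     # 0.25: rendered black is just the room
print(apparent_contrast(room, 0.0, 0.5))  # 3.0: the patch reads as "dark" next to its surround
```

The patch never gets darker than the room itself; only the relative contrast changes, which is the illusion developers are asked to rely on.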

Near Clipping Plane: While Magic Leap recommends keeping content at about arm's length for the best experience, it also cautions developers against placing objects within 30 centimeters for extended periods of time. Magic Leap also encourages developers to fade objects out as users get too close rather than clipping them abruptly, which is what HoloLens developers are accustomed to doing.
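A distance-based fade like the one Magic Leap encourages can be sketched as an opacity function of how far the content is from the user. The function name and the default band (fully opaque beyond 0.45 m, fully transparent at 0.30 m) are illustrative assumptions, not values from the Lumin SDK; the smoothstep curve is one common choice for a fade without hard edges.

```python
def near_fade_alpha(distance_m: float,
                    fade_start_m: float = 0.45,
                    fade_end_m: float = 0.30) -> float:
    """Opacity for content `distance_m` metres from the user (hypothetical helper).

    Fully opaque beyond fade_start_m, fully transparent at or inside
    fade_end_m, with a smoothstep ramp in between. Defaults are illustrative.
    """
    if fade_start_m <= fade_end_m:
        raise ValueError("fade_start_m must be greater than fade_end_m")
    # Normalize the distance to [0, 1] across the fade band.
    t = (distance_m - fade_end_m) / (fade_start_m - fade_end_m)
    t = max(0.0, min(1.0, t))
    # Smoothstep (3t^2 - 2t^3): zero slope at both ends of the band.
    return t * t * (3.0 - 2.0 * t)


for d in (1.0, 0.40, 0.30):
    print(f"{d:.2f} m -> alpha {near_fade_alpha(d):.2f}")
```

In an engine, the returned value would typically drive a material's alpha each frame, so content dissolves gradually as the user leans in instead of being sliced by the near clipping plane.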

In conclusion, developing for Magic Leap should be fairly familiar to those who are accustomed to developing in Unity, with some added tricks like eye tracking. It will be particularly interesting to see how developers take advantage of the Lumin Runtime engine.
