Eye-tracking is an important technology that many would consider a ‘must have’ for the next generation of VR headsets, although it is just one step towards resolving a long-standing issue that prevents users from seeing the virtual world as they naturally would the physical one. The issue is called the vergence-accommodation conflict (detailed below), and Singapore-based Lemnis Technologies’ latest varifocal prototype aims to provide headset manufacturers with the software and hardware to help make it a thing of the past.

If you’re already well versed in the vergence-accommodation conflict, keep reading. If not, we’ve summarized what’s at stake with varifocal displays at the bottom of the article.

‘Verifocal’ VR Kit by Lemnis

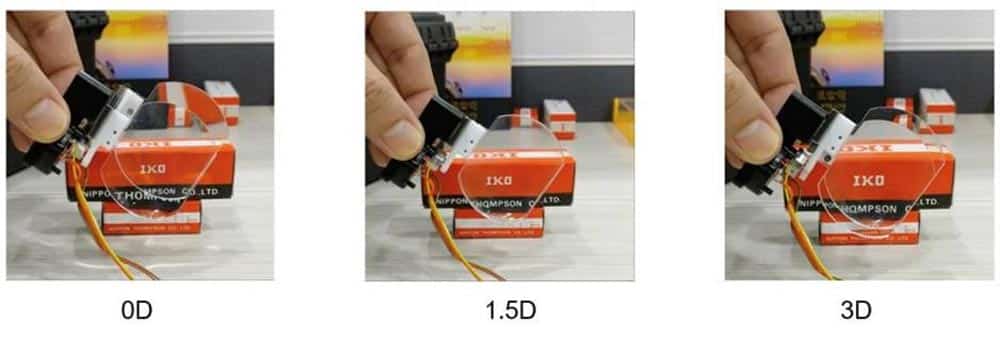

Unlike Oculus’ recently teased varifocal headset prototype, which couples eye-tracking with a moving display that physically adjusts to your eye’s focus, Lemnis’ latest prototype in its ‘Verifocal’ platform is based on an Alvarez lens design, an optical system invented in the ’60s by physicist Luis Alvarez that combines two adjustable lenses which shift to serve up a wide range of focal planes.

This too requires eye-tracking, but shifts the onus from the display onto the lenses themselves, which dynamically adjust to the user’s focus. Lemnis has also developed prototypes featuring adjustable displays.

German VR publication MIXED (German) sat down with Lemnis Technologies co-founder Pierre-Yves Laffont to learn more about the commercially focused platform, which aims to provide headset manufacturers with ready-made software and hardware solutions. The company’s ‘Verifocal’ VR kit prototype, built into a Windows VR headset, appears to fit into a pretty standard form factor.

Laffont says the new Verifocal prototype can move the focal plane continuously depending on where the user is looking, and provides a focal range from 25 cm (~10 inches) to infinity.
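For a sense of scale, optical focus is usually expressed in diopters, the reciprocal of the focal distance in meters. The short sketch below converts the quoted range into those units; the function name is ours, for illustration only, and is not part of any Lemnis software.

```python
import math


def distance_to_diopters(distance_m: float) -> float:
    """Convert a focal distance in meters to focus demand in diopters (1/m)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    # In Python, 1.0 / math.inf evaluates to 0.0, so infinity maps to 0 D.
    return 1.0 / distance_m


near_limit = distance_to_diopters(0.25)      # 25 cm -> 4.0 D
far_limit = distance_to_diopters(math.inf)   # infinity -> 0.0 D
print(f"Quoted range spans {far_limit:.1f} D to {near_limit:.1f} D")
```

In other words, a range of 25 cm to infinity works out to roughly 4 diopters of adjustable focus.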

“There is no fixed number of focal planes, compared to Magic Leap for example who has only 2,” Laffont explains. “The result is a smooth change of focus that can cover the whole accommodation range.”

To accomplish this, the company’s varifocal software engine analyzes the scene and uses the eye tracker output and the user’s eyeglasses prescription to estimate the optimal focus. It then instructs the adaptive optics to adjust the focus and correct the distortions synchronously.
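Lemnis has not published how its engine works, so the following is only a rough sketch of the general idea, under simplifying assumptions of our own: fixation depth is estimated purely from the angle between the two gaze rays, the prescription is a single spherical value in diopters, and the optics cover 0 to 4 diopters. Scene analysis and distortion correction are left out, and every name here is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class GazeSample:
    """Hypothetical eye-tracker output (not a real vendor API)."""
    ipd_m: float               # interpupillary distance in meters
    vergence_angle_rad: float  # angle between the left and right gaze rays


def fixation_distance_m(gaze: GazeSample) -> float:
    """Estimate the distance to the fixated point by simple triangulation."""
    if gaze.vergence_angle_rad <= 0.0:
        return math.inf  # parallel gaze rays: looking at infinity
    return (gaze.ipd_m / 2.0) / math.tan(gaze.vergence_angle_rad / 2.0)


def target_focus_diopters(gaze: GazeSample,
                          prescription_d: float = 0.0,
                          min_d: float = 0.0,
                          max_d: float = 4.0) -> float:
    """Pick a focus setting for the optics, clamped to the assumed range.

    Assumption: a spherical prescription is compensated by subtracting it
    from the focus demand, so a -1 D myope gets the image 1 D closer.
    """
    demand = 1.0 / fixation_distance_m(gaze)  # 1/inf == 0.0
    corrected = demand - prescription_d
    return max(min_d, min(max_d, corrected))


# Eyes 64 mm apart converging on a point 0.5 m away.
sample = GazeSample(ipd_m=0.064,
                    vergence_angle_rad=2.0 * math.atan(0.032 / 0.5))
print(round(target_focus_diopters(sample), 3))
print(round(target_focus_diopters(sample, prescription_d=-1.0), 3))
```

A real system would also have to filter noisy gaze data and keep the distortion correction in step with the lens position, which the article notes is done synchronously.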

When asked why Lemnis is exploring Alvarez optics as the next area of development, Laffont told MIXED this:

“Moving the screen is conceptually simple, mechanically it can be put in place in the short-term. Other approaches such as Alvarez lenses can further improve the optical quality. For AR, where the vergence-accommodation conflict is even more critical, liquid lenses are promising and there is a lot of work going in this direction. Our strength at Lemnis is that our Verifocal platform is built to work with all of those approaches – and we have built the expertise and processes to integrate any of them into a partner’s headset.”

Lemnis is initially targeting the enterprise segment with its tech, “where quality matters most and price is less sensitive.” Laffont believes the VR market will eventually reach a critical scale that will allow manufacturing prices to come down, and that varifocal displays will likely become commonplace in most VR headsets.

Lemnis Technologies was founded in 2017 by scientists and engineers from academic institutions including MIT, Brown, ETH Zurich, Inria, NUS, NTU, and KAIST, and from companies such as Disney Research, Philips, and NEC.

The ‘Verifocal’ prototype and the associated varifocal platform have been named an honoree of the CES 2019 Innovation Awards.

Vergence-Accommodation Conflict

Outside of a headset, the muscles in your eyes automatically change the shape of each lens to bend incoming light onto your retina so that it appears in focus. Simultaneously, both eyes converge on whatever real-world object you’re focusing on to create a single picture in your brain.

In short, the vergence-accommodation conflict is a physical phenomenon that occurs when the headset’s display presents light at a static distance from your eye, making your eyes strain to resolve whatever virtual object you happen to be looking at.

In a headset with a fixed-focus display or optics, you’re basically left with an uncomfortable mismatch that goes against ingrained muscle memory developed over the course of your entire life: you see an object rendered a few feet away and your eyes converge on it, but your eyes’ lenses never change shape since the light is always coming from a static source. You can read more about the vergence-accommodation conflict and other eye-tracking topics in our extensive editorial on why eye-tracking is a game changer for VR.
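To put a number on that mismatch, the sketch below compares where the eyes converge with where the optics force them to focus, both expressed in diopters. The 2 m fixed focal distance and 63 mm interpupillary distance are illustrative assumptions, not figures for any particular headset.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two gaze rays when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def va_mismatch_diopters(virtual_distance_m: float,
                         focal_distance_m: float) -> float:
    """Gap between vergence demand and accommodation demand, in diopters."""
    return abs(1.0 / virtual_distance_m - 1.0 / focal_distance_m)


# A virtual object rendered 0.5 m away in a headset assumed to focus at 2 m.
print(f"vergence angle: {vergence_angle_deg(0.5):.2f} degrees")
print(f"mismatch: {va_mismatch_diopters(0.5, 2.0):.2f} D")
```

With these assumed values the eyes converge as if the object were 0.5 m away while focusing as if it were 2 m away, a gap of 1.5 diopters. A varifocal system aims to drive that gap towards zero by moving the focal plane to match the vergence distance.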