Lytro, a leading light-field company, is positioning its light-field capture and playback technology as the ideal format for immersive content. The company is building a toolset for capturing, rendering, and combining both synthetic and live-action light-field experiences, which can then be delivered at the highest playback quality each platform supports.

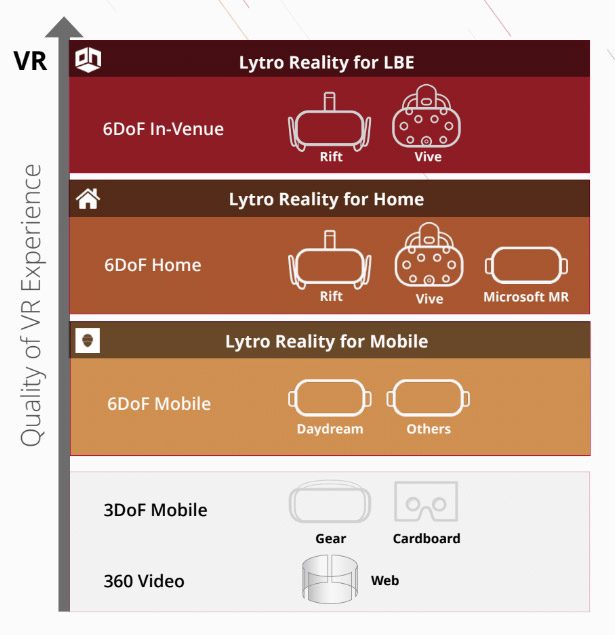

Speaking with Lytro CEO Jason Rosenthal at the company’s Silicon Valley office, I got a rundown of how the company aims to deploy its toolset to create what he calls the Lytro Reality Experience: immersive experiences stored as light-fields and then delivered according to the quality and performance capabilities of each consumption end-point, from the highest-end VR headset at a VR arcade all the way down to mobile 360 footage viewed on a smartphone.

Light-fields are pre-computed scenes that can recreate the view from any point within the captured or rendered volume. In practice, this means a light-field can be played back with visual quality beyond what real-time rendering can achieve, while still retaining immersive 6DOF positional tracking and (to an extent) interactivity. Though not without challenges of their own, light-fields aim to combine the immersion of real-time rendering with the visual quality of pre-rendered VR experiences.

Rosenthal made the point that revolutions in media require new content formats with new capabilities. He pointed to PDF, OpenGL, HTTP, and MPEG as examples of formats and standards that drastically altered the way we create and consume information. Immersive media, Rosenthal says, requires a volumetric format.

To that end, Lytro has been building a complete light-field pipeline covering capture and rendering, mastering, delivery, and playback. Rosenthal says the benefit of this approach is that creators can capture or render and master their content once, then distribute it to headsets and platforms of varying capabilities without recapturing, recreating, or remastering it for each one, as must currently be done for most real-time content that spans desktop and mobile VR headsets.

There are three main pieces of Lytro’s toolset that make this possible. First is the company’s light-field camera, Immerge, which enables high-quality live-action light-field capture; we recently detailed its latest advancements. Then there’s the company’s Volume Tracer software, which renders synthetic light-fields from CG content. Finally, there’s the company’s playback software, which aims to deliver the highest-fidelity playback possible on each device.

For example, a creator could build a high-fidelity CG scene like One Morning (a Lytro Reality Experience the company recently revealed) with their favorite industry-standard rendering and animation tools, then output it as a Lytro Reality Experience that can be deployed to high-end headsets, low-end headsets, and even 360 video, without modifying the source content for the specific capabilities of each device and without giving up the graphical quality of raytraced, pre-rendered content.

Lytro is keeping its tools close to the chest for now; the company is working one-on-one with select customers to release more Lytro Reality Experiences and encourages anyone interested to get in touch.

I’ve seen a number of the company’s latest Lytro Reality Experiences (like Hallelujah) played back across the spectrum of devices, from high-end desktops suitable only for out-of-home VR arcades all the way down to 360 playback on an iPad. The idea is to maximize fidelity to the greatest degree each device can support. On the high-end desktop, viewed through a VR headset, that means maximum-quality imagery generated on the fly from the light-field dataset, with 6DOF tracking. For less capable computers or mobile headsets, the same scene would be represented as baked-down 3D geometry, while mobile devices would get high-quality 360 video rendered at up to 10K resolution, all from the same pre-rendered source assets.

– – — – –

The appeal of the light-field approach is certainly clear, especially for creators seeking to make narrative experiences that go beyond what can be rendered in real time, even on top-of-the-line hardware.

Since light-fields are pre-rendered, however, they can’t be interactive in the same way real-time rendered content can. Rosenthal acknowledges the limitation and says Lytro will soon debut integrations with leading game engines that will make it easy to mix light-field and real-time content in a single experience, a capability that opens up some very interesting possibilities for the future of VR content.

For all of the potential of light-fields, one persistent hurdle has hampered their adoption: file size. Even small light-field scenes can amount to huge quantities of data, so much that it becomes challenging to deliver experiences to customers without resorting to massive static downloads. Lytro is well aware of the challenge and has been working aggressively to reduce data sizes. While Rosenthal says the company is still working on reining in light-field file sizes, Lytro provided Road to VR with a fresh look at its current data envelopes for each consumption end-point:

- 0.5TB/minute for 6DOF in-venue
- 2.7GB/minute for in-home desktop
- 2.5GB/minute for tablet/mobile devices
- 9.8MB/minute for 360 omnistereo

All of the above figures are based on the company’s Hallelujah experience, as optimized for each consumption end-point. Think these numbers are scary? They were much higher not long ago. Lytro has also teased “interesting work” still in development which it claims will reduce the above figures by some 75%.
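To put these envelopes in perspective, the sketch below converts each per-minute figure into an average data rate in megabits per second and applies the roughly 75% reduction Lytro has teased. It is a back-of-the-envelope calculation only: it assumes decimal units (1 TB = 1,000,000 MB, 1 GB = 1,000 MB) and a constant data rate across each minute, neither of which Lytro has specified.

```python
# Lytro's reported per-minute data envelopes for Hallelujah, expressed in
# decimal megabytes (assumption: 1 TB = 1,000,000 MB and 1 GB = 1,000 MB).
ENVELOPES_MB_PER_MIN = {
    "6DOF in-venue": 500_000,   # 0.5 TB/minute
    "in-home desktop": 2_700,   # 2.7 GB/minute
    "tablet/mobile": 2_500,     # 2.5 GB/minute
    "360 omnistereo": 9.8,      # 9.8 MB/minute
}


def mb_per_min_to_mbps(mb_per_min: float) -> float:
    """Convert megabytes per minute to an average rate in megabits per second."""
    return mb_per_min * 8 / 60  # 8 bits per byte, 60 seconds per minute


def reduced(mb_per_min: float, reduction: float = 0.75) -> float:
    """Apply a fractional size reduction (0.75 models the ~75% cut Lytro teased)."""
    return mb_per_min * (1 - reduction)


if __name__ == "__main__":
    for name, size in ENVELOPES_MB_PER_MIN.items():
        now = mb_per_min_to_mbps(size)
        later = mb_per_min_to_mbps(reduced(size))
        print(f"{name:>16}: {now:10.1f} Mbit/s now, {later:10.1f} Mbit/s after a 75% cut")
```

Under these assumptions, the in-home desktop tier works out to an average of about 360 Mbit/s, or about 90 Mbit/s after a 75% reduction, while the 6DOF in-venue tier is on the order of 66,700 Mbit/s.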

Despite Lytro’s vision, its growing toolset, and its ongoing effort to battle file sizes into submission, there’s still no publicly available demo of its technology that you can see through your own headset. Fortunately, the company expects the first public Lytro Reality Experience from one of its partners to launch in Q1 2018.